Real-World AI Dermatology Studies: Evaluating AI Rash Detection and Outcomes

Explore real-world AI dermatology studies showing AI matches expert rash detection, enhancing efficiency and equity in skin disease care. Essential for clinicians.

Estimated reading time: 8 minutes

Key Takeaways

- In the real-world studies reviewed here, AI systems reached sensitivities of 85–95% and specificities from 66% to above 90%, rivaling expert performance.

- Deployment in routine practice delivers efficiency gains, reducing unnecessary procedures and speeding referrals.

- Equity benefits arise from consistent decision support across provider experience levels and geographic locations.

- Challenges include image variability, selection bias, and underrepresentation of darker skin types.

- Future research should emphasize diverse cohorts, explainable AI, and longitudinal outcome tracking.

Table of Contents

- Introduction

- Background and Context

- Overview of Real-World AI Dermatology Studies

- In-Depth Review of AI-Based Rash Detection Case Studies

- Methodological Details

- Common Limitations

- Clinical Outcomes and Evidence

- Discussion of Practical Implications

- Conclusion

- FAQ

Introduction

Real-world AI dermatology studies examine AI tools for skin disease detection deployed in everyday clinical practice. These investigations draw on routine workflow data and diverse patient populations, taking evaluation beyond the laboratory and into actual clinics and hospitals.

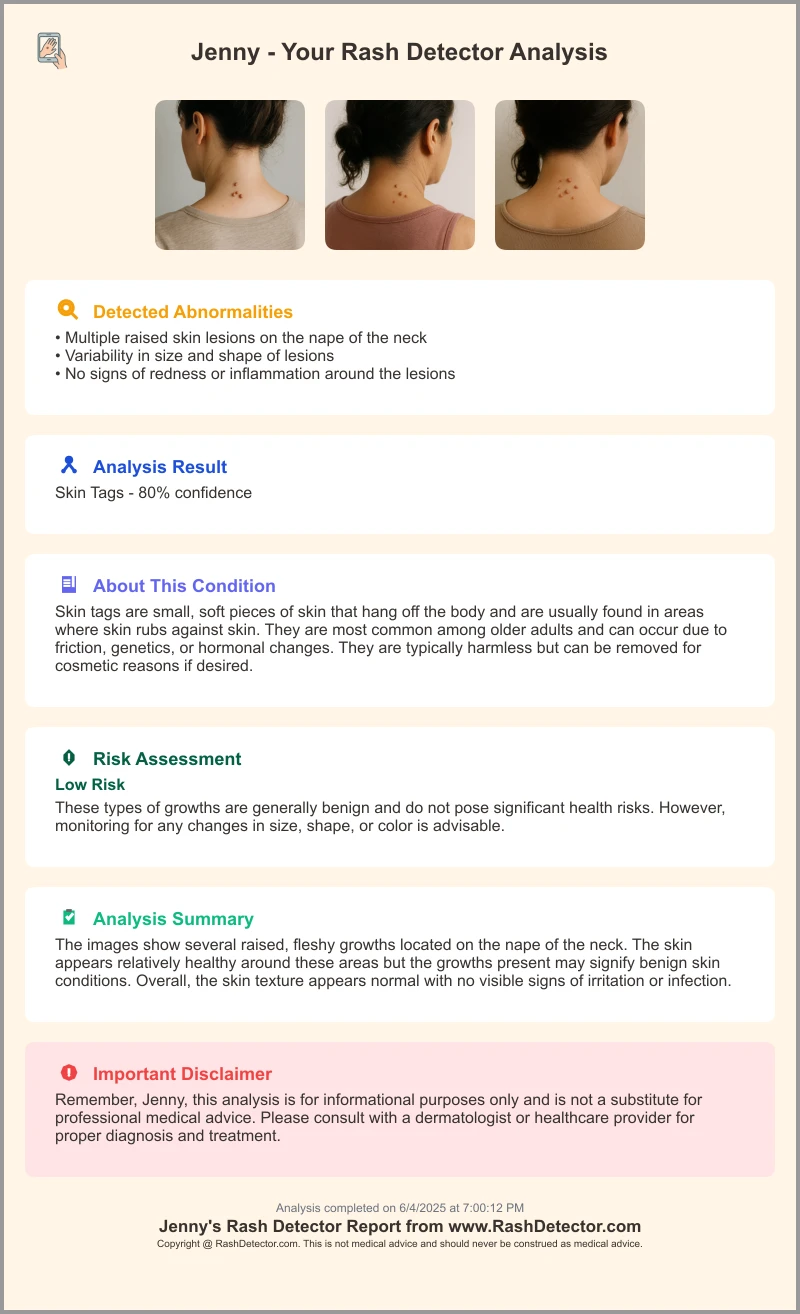

In practice, clinicians and patients also rely on AI tools for on-demand analysis. For instance, Rash Detector offers instant AI-driven skin analysis: upload three images and receive a detailed AI-generated report in seconds.

Dermatology has moved from purely manual visual checks and dermoscopy toward computer-aided image interpretation. Early expert systems gave way to machine learning and, now, deep learning models that learn from millions of images. Today, field validation draws on routine care data, including photos from smartphones and dermoscopes and images stored in electronic health records, to train and test algorithms under real-life conditions.

This post informs clinicians, patients, and policymakers about real-world evidence and clinical outcomes from AI-based rash detection case studies, exploring performance, workflow impacts, and patient safety.

Background and Context

Evolution of AI in Healthcare

- Expert systems: rule-based programs mirroring clinical guidelines.

- Machine learning: algorithms uncovering patterns without explicit rules.

- Deep learning: neural networks extracting image features automatically.

These stages have made AI more flexible and powerful in medical image analysis (PubMed study).

AI Adoption in Dermatology

- Conventional dermoscopy and visual assessment remain the standard of care for rash and lesion evaluation.

- Digital tools assist clinicians with image capture, storage, and algorithm-based analysis. Compare top photo rash diagnosis tools.

- Automated lesion border detection and color analysis speed up triage (PMC article).

Common Rash Detection Methods

- Manual assessment: clinicians inspect skin patterns, shapes, and colors.

- Digital assessment: smartphone or dermoscope images analyzed by convolutional neural networks (CNNs) to detect rashes or suspicious lesions.
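To make the digital-assessment route above more concrete, the sketch below shows the basic operation a CNN repeats many times: sliding a small kernel across an image to build a feature map. It is a simplified illustration, not how any tool cited here works. The toy 4×4 grayscale image and the hand-set edge kernel are assumptions; a trained CNN learns many such kernels from labeled images and stacks them in deep layers.

```python
# Minimal pure-Python illustration of a single convolutional filter.
# Assumptions: a grayscale image as a list of rows of 0-255 intensities and a
# hand-set kernel; a real CNN learns its kernels from labeled training images.


def conv2d(image, kernel):
    """Valid 2D cross-correlation (what CNN 'convolution' layers compute), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Zero out negative responses, as a ReLU activation does."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 image: lighter skin on the left, a darker patch on the right.
image = [
    [230, 230, 50, 50],
    [230, 230, 50, 50],
    [230, 230, 50, 50],
    [230, 230, 50, 50],
]

# Hypothetical kernel that responds where brightness drops from left to right,
# i.e. along a vertical lesion border.
edge_kernel = [
    [1, -1],
    [1, -1],
]

features = relu(conv2d(image, edge_kernel))
```

Here each row of `features` is `[0.0, 360.0, 0.0]`: the filter fires only at the column where the simulated lesion border sits and stays silent over uniform skin.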

Importance of Clinical Studies

Real-world data test AI tools across varied image quality, lighting, and skin types, which is essential for establishing generalizability and safety. See further insights on assessing real-world AI effectiveness.

Overview of Real-World AI Dermatology Studies

Defining Real-World Study

Real-world AI dermatology studies evaluate performance in actual care settings, with patients of mixed ages and skin phototypes and images from varied devices (smartphones, dermoscopes, or clinic cameras) captured within routine workflows. Clinicians use these tools alongside standard practice, so outcomes reflect genuine clinical utility (Frontiers study).

Contrasting with Controlled Trials

- Controlled trials: narrow inclusion criteria, high-resolution imaging, trained operators, and fixed protocols.

- Real-world studies: heterogeneous cohorts, variable image quality, and integration into routine workflows, including EHRs and picture archiving systems (BJD article, PubMed study).

Why Real-World Evidence Matters

- Generalizability: confirms AI works beyond ideal lab conditions.

- Usability: measures user experience, training needs, and workflow impact.

- Safety: tracks missed cases or false alarms in routine practice, ensuring patient protection.

In-Depth Review of AI-Based Rash Detection Case Studies

For an overview of similar deployments, see AI rash case studies.

Case Study 1: DERM Deep Learning System

- Post-deployment cohort: 14,500 dermoscopic images from patients of all ages and Fitzpatrick skin types.

- Methodology: ensemble of CNNs with image normalization and augmentation to handle diverse lighting and angles.

- Metrics: sensitivity ≥95%, specificity ≥90%, matching pre-market trial results.

- Safety outcome: 100% detection rate of malignant lesions requiring urgent referral, with no missed melanomas.
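Case Study 1 describes an ensemble of CNNs but not how their outputs are combined. One common approach, assumed here for illustration, is soft voting: average each model's predicted probability and compare the result with a decision threshold. The model outputs, the decision labels, and the 0.5 default threshold below are hypothetical.

```python
from statistics import mean


def ensemble_probability(model_probs):
    """Soft voting: average the malignancy probabilities from several models."""
    if not model_probs:
        raise ValueError("need at least one model output")
    return mean(model_probs)


def classify(model_probs, threshold=0.5):
    """Return (averaged probability, decision); the threshold is hypothetical."""
    p = ensemble_probability(model_probs)
    decision = "refer" if p >= threshold else "routine"
    return p, decision


# Hypothetical outputs from three CNNs for two lesions.
p1, d1 = classify([0.62, 0.71, 0.55])                  # p1 is about 0.63 -> "refer"
p2, d2 = classify([0.30, 0.45, 0.40])                  # p2 is about 0.38 -> "routine"
p3, d3 = classify([0.30, 0.45, 0.40], threshold=0.35)  # lower threshold -> "refer"
```

Lowering the threshold flags more lesions, which raises sensitivity at the cost of specificity; a deployment would typically choose the threshold from validation data.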

Case Study 2: AI Decision Support in Primary Care

- Design: prospective trial with primary care providers using a mobile app for melanoma triage.

- Data sources: smartphone photos uploaded to the app, patient history inputs via simple forms.

- Metrics: negative predictive value (NPV) of 100% for invasive melanoma; the tool reduced unnecessary specialist referrals by over 50%.

- Missed cases: one in situ melanoma with atypical presentation, highlighting the need for expert review of edge cases.

Case Study 3: Meta-Analysis of AI Rash and Neoplasm Detection

- Scope: pooled data from several real-world deployments in clinics across Europe and North America.

- Findings: AI sensitivity 85–92%, specificity 66–78%; overall performance comparable or superior to that of dermatologists, with the clearest advantage over non-experts.

- Algorithm types: standalone CNNs, ensemble architectures, and hybrid models integrating clinical metadata.

Methodological Details

- Study designs: retrospective vs. prospective; retrospective studies reviewed stored images, while prospective trials collected images in real time.

- Data preprocessing: image normalization (color correction, cropping) and augmentation (rotation, flipping) to increase robustness.

- Training/validation: a common split is 70% training, 15% validation, and 15% test.

- Performance metrics defined:

- Sensitivity = true positives / (true positives + false negatives)

- Specificity = true negatives / (true negatives + false positives)

- NPV = true negatives / (true negatives + false negatives)
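The split and metric definitions above translate directly into code. The sketch below assumes binary labels (1 = disease present, 0 = absent) and uses a hypothetical set of ten predictions. It splits individual items; when one patient contributes several images, studies often split by patient instead so that no patient appears in both training and test sets.

```python
import random


def split_70_15_15(items, seed=0):
    """Shuffle reproducibly, then split into 70% train, 15% validation, 15% test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 70 // 100  # integer arithmetic avoids float rounding surprises
    n_val = n * 15 // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels (1 = disease, 0 = no disease)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def _ratio(num, den):
    """Safe division: NaN when the denominator is zero."""
    return num / den if den else float("nan")


def metrics(tp, tn, fp, fn):
    """Sensitivity, specificity, and NPV as defined in the text."""
    return {
        "sensitivity": _ratio(tp, tp + fn),
        "specificity": _ratio(tn, tn + fp),
        "npv": _ratio(tn, tn + fn),
    }


# Hypothetical labels and predictions for ten lesions.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
counts = confusion_counts(y_true, y_pred)  # (3, 4, 2, 1)
result = metrics(*counts)  # sensitivity 0.75, specificity about 0.67, NPV 0.8

train, val, test = split_70_15_15(range(20))  # sizes 14, 3, 3
```

With these definitions, the NPV of 100% reported in Case Study 2 means the tool produced no false negatives for invasive melanoma in that cohort.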

Common Limitations

- Selection bias: exclusion of poor-quality images or rare presentations.

- Image variability: uncontrolled lighting, focus, and background noise.

- Underrepresentation: few cases of darker skin types or uncommon rash variants.

- See also Frontiers study, BJD article, and PMC article on study limitations.

Clinical Outcomes and Evidence

Diagnostic Accuracy Improvements

Pooled meta-analysis estimates show sensitivity of 85–92% and specificity of 66–78%, on par with board-certified dermatologists (Nature article).

Efficiency and Workflow Impact

- Unnecessary excisions cut by up to 40%, reducing patient morbidity and system costs.

- Referral times shortened by 30%, enabling faster treatment of confirmed melanomas (BJD article).

Equity and Accessibility

- AI tools narrow skill gaps: general practitioners reached diagnostic accuracy close to that of specialists.

- Consistent recommendations across providers reduce variation in care quality.

- Increased access to expert-level decision support in rural or underserved areas.

Discussion of Practical Implications

Integration Challenges

- Workflow compatibility: tools need seamless integration with EHRs and picture archiving and communication systems (PACS) to avoid double data entry (PubMed, PMC).

- Organizational readiness: staff training, IT support, and change management are crucial for uptake.

Ethical Considerations

- Patient privacy: secure image storage and encryption protect sensitive data.

- Algorithmic fairness: continuous performance monitoring across Fitzpatrick skin types to prevent bias.
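Continuous fairness monitoring can start with something as simple as computing sensitivity separately for each Fitzpatrick group and flagging groups that trail the best-performing one. The sketch below assumes each record carries a skin-type group label, the true diagnosis, and the AI decision; the group labels, the records, and the 0.05 tolerance are hypothetical.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, has_disease, flagged_by_ai) tuples.

    Groups with no disease-positive cases are omitted, because sensitivity
    is undefined without positives.
    """
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, has_disease, flagged in records:
        if has_disease:
            if flagged:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


def flag_gaps(per_group, max_gap=0.05):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    best = max(per_group.values())
    return sorted(g for g, s in per_group.items() if best - s > max_gap)


# Hypothetical audit data grouped by Fitzpatrick skin type.
records = (
    [("I-II", True, True)] * 9
    + [("I-II", True, False)] * 1
    + [("V-VI", True, True)] * 7
    + [("V-VI", True, False)] * 3
    + [("V-VI", False, False)] * 5  # negatives do not affect sensitivity
)
per_group = sensitivity_by_group(records)  # {"I-II": 0.9, "V-VI": 0.7}
gaps = flag_gaps(per_group)                # ["V-VI"]
```

Small subgroups give unstable estimates, so in practice a flagged gap would prompt closer review rather than an automatic conclusion.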

Regulatory and Validation Requirements

- Post-market surveillance: regulators increasingly require real-world evidence to maintain approvals.

- Transparency: clear reporting on algorithm versions, training data, and performance metrics.

Recommendations for Future Studies

- Multi-center, multi-ethnic cohorts to broaden validation and fairness.

- Explainable AI features: visual heatmaps or confidence scores to support clinician trust.

- Continuous outcome monitoring: track patient follow-ups, adverse events, and safety signals.
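Confidence scores and expert review of edge cases (see Case Study 2) can be combined in a simple routing rule: act automatically only when the model is confident, and send uncertain cases to a clinician. The sketch assumes the model outputs a reasonably calibrated probability of malignancy; the 0.2 and 0.8 cut-offs and the route names are hypothetical.

```python
from collections import Counter


def triage(probability, low=0.2, high=0.8):
    """Route a case by model confidence; low/high cut-offs are hypothetical."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= high:
        return "urgent referral"    # model output indicates high risk
    if probability <= low:
        return "routine follow-up"  # model output indicates low risk
    return "clinician review"       # uncertain: defer to a human expert


# Tallying routes over time gives a simple signal for outcome monitoring.
routes = Counter(triage(p) for p in [0.93, 0.55, 0.12, 0.07, 0.81])
```

Moving the cut-offs apart sends more cases to clinicians; moving them closer together automates more decisions, at a higher risk of confident errors.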

Conclusion

Real-world AI dermatology studies indicate that AI can match expert performance in rash and skin cancer detection and can exceed the performance of non-experts. Key takeaways include:

- AI sensitivity and specificity rival those of dermatologists in routine practice.

- Efficiency gains include fewer unnecessary procedures and faster diagnoses.

- Equity benefits from consistent support across provider experience levels and locations.

Robust real-world evidence is essential for clinicians and policymakers to adopt AI safely. Future research should focus on diverse populations, improved algorithm explainability, and longitudinal tracking of patient outcomes to ensure lasting impact.

FAQ

- What defines a real-world AI dermatology study? These studies test AI tools under routine clinical conditions, using diverse patient groups, variable devices, and integrated workflows to assess true utility.

- How do AI-based rash detectors compare to dermatologists? Across the studies reviewed here, reported sensitivities range from 85% to 95% and specificities from 66% to above 90%, often matching or surpassing non-expert performance and approaching expert accuracy.

- What are the main limitations? Selection bias, image variability, and underrepresentation of darker skin types remain challenges, underscoring the need for inclusive, multi-center research.