The Ethics of AI Rash Detection: Privacy, Bias, and Safety in Skin Apps

Explore the ethics of AI rash detection, tackling privacy, bias, and patient safety in skin apps, essential for responsible AI dermatological tools.


Key Takeaways

- AI-driven rash detection leverages computer vision and deep learning for rapid skin condition screening.

- Ethical challenges include data privacy, algorithmic bias, informed consent, liability, and access disparities.

- Responsible design demands robust security, diverse datasets, transparent consent, and fairness audits.

- Regulatory frameworks and multi-stakeholder collaboration are vital for patient safety and trust.

- Future trends point to explainable AI, real-world monitoring, and equitable deployment models.

Table of Contents

- Introduction

- Understanding AI-driven Rash Detection

- Ethics of AI Rash Detection: Key Ethical Challenges

- Data Privacy Concerns in Skin Diagnosis Apps

- Algorithmic Bias in AI Rash Detection

- Informed Consent and Transparency in AI Diagnosis

- Ethics of AI Rash Detection: Implications and Risks

- Misdiagnosis Risk with AI-Assisted Diagnosis

- Liability and Accountability in AI Errors

- Socio-Economic Impact and Health Equity

- Ethics of AI Rash Detection: Regulatory and Industry Perspectives

- Current Regulations & WHO Guidelines

- Stakeholder Collaboration and Perspectives

- Frameworks for Ethical Improvement

- Future Trends in Ethics of AI Rash Detection

- Conclusion

- FAQ

The ethics of AI rash detection has come to the forefront as more people rely on smartphone tools for skin health. “Ethics of AI rash detection” refers to the set of moral principles and guidelines that govern the development, deployment, and use of AI systems for identifying dermatological conditions. AI-driven rash detection uses computer vision and deep learning on smartphone images to screen for common and rare skin issues. Skin diagnosis apps let users take photos of their rashes or lesions and get instant feedback.

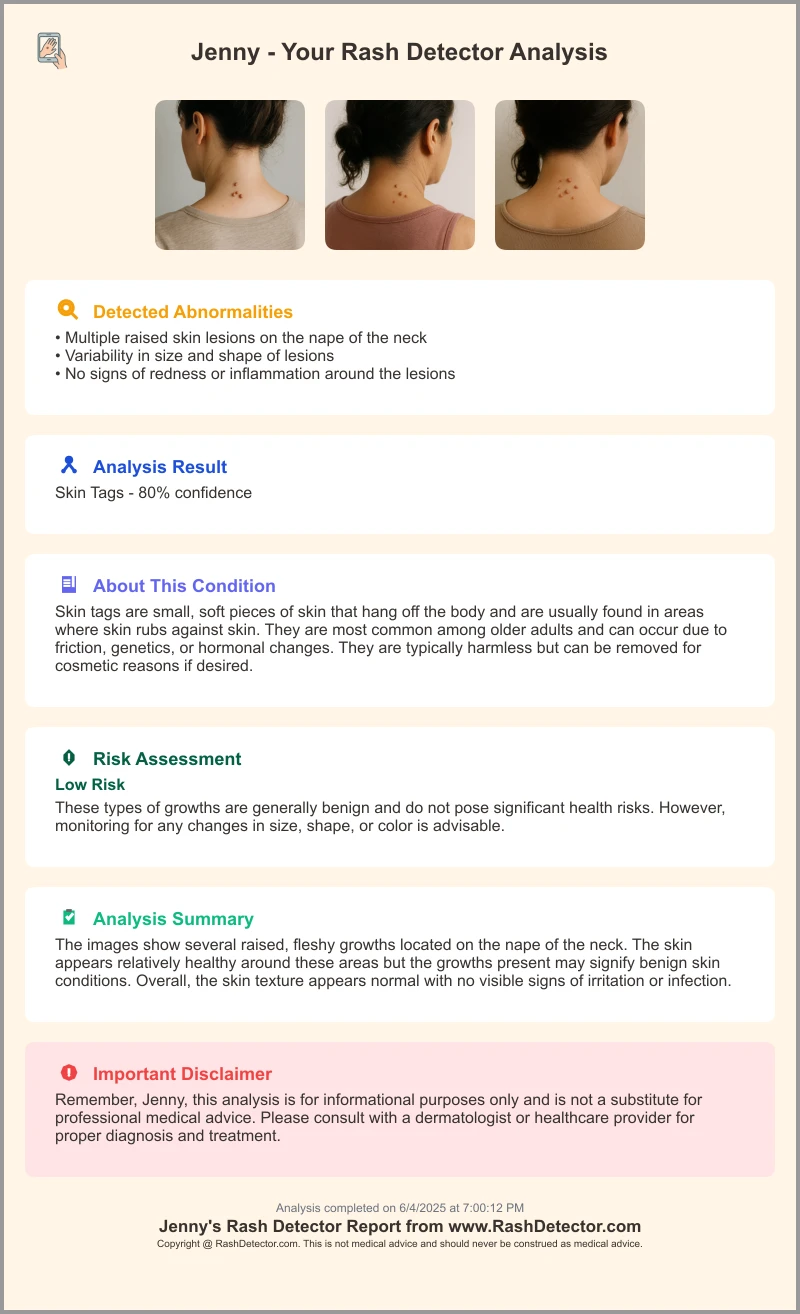

Rash Detector is one example of these AI-based skin analysis platforms: users upload three images of their rash and receive an instant, detailed report.

This article examines the ethical challenges and implications surrounding AI-driven rash detection and skin diagnosis apps. We explore privacy risks, fairness gaps, consent practices, liability questions, and access barriers, with the goal of mapping out how to build responsible, safe, and equitable AI tools for dermatology care.

AI-driven tools promise greater accessibility and efficiency in dermatological care but introduce complex ethical challenges impacting patient safety, privacy, and equity.

Increasing use of AI in healthcare underscores the need for robust ethical frameworks.

Understanding AI-driven Rash Detection

AI-driven rash detection relies on machine learning algorithms that analyze skin images to identify conditions. These systems are trained on large datasets of skin images, often thousands of examples labeled by dermatologists, and then classify user-submitted photos based on the patterns they learned.

- Machine learning algorithms: Supervised deep learning models, especially convolutional neural networks, trained on labeled dermatological image datasets.

- Visual pattern recognition: Models learn color, texture, and shape to flag eczema, psoriasis, fungal infections, and skin cancer.

Recent technological advancements include:

- Deep learning and CNNs for improved lesion detection.

- Computer vision techniques in lesion segmentation and feature extraction.

Examples of popular apps and tools:

- SkinVision: Smartphone app for lesion risk assessment using CNN models.

- Aysa: AI-based tool offering instant feedback on rashes.

- DermAI: Integrated teledermatology software for clinical use.

Some diagnostic accuracy comparisons suggest that AI-driven rash detection can match or exceed the accuracy of general physicians for certain conditions, but these results do not cover every condition or patient group, so clinical oversight remains essential.

Ethics of AI Rash Detection: Key Ethical Challenges

Data Privacy Concerns in Skin Diagnosis Apps

Data privacy in AI diagnosis involves the secure collection, storage, and processing of personal health information and skin images, which may reveal identifiable features.

Risks:

- Unauthorized access to images and health data.

- Data breaches exposing medical records and personal details.

- Secondary use of images (e.g., research or marketing) without explicit consent.

Best practices:

- Encryption of data both in transit and at rest, with end-to-end encryption where images are shared between users and clinicians.

- Anonymization or pseudonymization of images and metadata.

- Clear privacy policies explaining data usage, sharing, and retention.

Robust data security and clear communication of data usage are both essential to earning user trust.
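As a minimal sketch of the pseudonymization practice listed above, the following Python example replaces a user ID with a keyed hash and drops directly identifying fields before a record is stored. The field names, the `pseudonymize_record` helper, and the demo key are all hypothetical, not taken from any particular app. Note that this only pseudonymizes the metadata: the skin image itself may still show identifiable features and needs separate handling.

```python
import hashlib
import hmac

# Hypothetical field names; a real app would define its own schema.
IDENTIFYING_FIELDS = {"name", "email", "gps", "device_id"}


def pseudonymize_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with the user ID replaced by a keyed
    hash (HMAC-SHA256) and directly identifying fields removed."""
    token = hmac.new(
        secret_key, record["user_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in IDENTIFYING_FIELDS and key != "user_id"
    }
    cleaned["pseudonym"] = token
    return cleaned


# Illustrative record; values are made up.
record = {
    "user_id": "u-1001",
    "email": "a@example.com",
    "gps": (51.5, -0.1),
    "image_file": "rash_01.jpg",
    "body_site": "forearm",
}
safe = pseudonymize_record(record, secret_key=b"demo-key-do-not-use")
print(sorted(safe))
```

A keyed hash is used rather than a plain hash so that someone without the key cannot recompute the pseudonym from a known user ID; the key would need to be stored separately from the data.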

Algorithmic Bias in AI Rash Detection

Algorithmic bias arises when an AI model yields systematically inaccurate results for certain demographic groups due to unrepresentative training data.

Implications:

- Lower accuracy for darker skin tones or rare conditions.

- Exacerbation of health disparities in underrepresented communities.

- Loss of trust among marginalized users.

Mitigation strategies:

- Build diverse datasets that include multiple skin types, ages, genders, and rare rashes.

- Employ fairness metrics (e.g., equal false-positive rates across groups).

- Conduct regular bias audits and retrain models when disparities emerge.

Addressing bias is critical to equitable care.
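To make the fairness metric mentioned above concrete, here is a small sketch of a bias audit that compares false-positive rates across groups. The group labels (loosely modeled on Fitzpatrick skin-type ranges) and the records are invented for illustration, and the function names are assumptions rather than part of any specific toolkit.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the false-positive rate per group.

    records: iterable of (group, true_label, predicted_label) with labels
    0 (no condition) or 1 (condition). Groups without any true negatives
    are omitted because their rate is undefined.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 0:
            negatives[group] += 1
            if y_pred == 1:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


def fpr_gap(rates):
    """Largest difference in false-positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up audit data: (skin-type group, true label, model prediction).
audit = [
    ("I-II", 0, 0), ("I-II", 0, 0), ("I-II", 0, 1), ("I-II", 0, 0), ("I-II", 1, 1),
    ("V-VI", 0, 1), ("V-VI", 0, 1), ("V-VI", 0, 0), ("V-VI", 0, 0), ("V-VI", 1, 0),
]
rates = false_positive_rates(audit)
print(rates, fpr_gap(rates))
```

In this toy data the model wrongly flags healthy skin twice as often for one group as for the other. A gap like that is the kind of disparity a regular audit would surface and that could trigger retraining; what size of gap is acceptable is a policy decision, not something the code decides.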

Informed Consent and Transparency in AI Diagnosis

Informed consent means users fully understand the AI’s role, the data collected, and their right to opt out. Transparency requires plain-language disclosures and explainable AI interfaces.

- Clear, user-friendly consent portals that outline how the AI works.

- Explainable AI dashboards showing why a rash was flagged.

- Plain-language summaries and FAQs about risks and benefits.
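One way to back a consent portal with enforceable rules is to store consent as structured data that the rest of the app checks before using an image. The sketch below is an assumption about how such a record could look; the `ConsentRecord` class, its fields, and the purpose names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional, Set


@dataclass
class ConsentRecord:
    """What a user agreed to, under which policy version, and whether
    they have since opted out."""
    user_pseudonym: str
    policy_version: str
    purposes: Set[str] = field(default_factory=set)  # e.g. {"diagnosis"}
    withdrawn_at: Optional[datetime] = None

    def allows(self, purpose: str) -> bool:
        # A purpose is allowed only if it was explicitly granted
        # and consent has not been withdrawn.
        return self.withdrawn_at is None and purpose in self.purposes

    def withdraw(self) -> None:
        # Record the opt-out time; every purpose is denied afterwards.
        self.withdrawn_at = datetime.now(timezone.utc)


consent = ConsentRecord("p-7f3a", "2024-01", {"diagnosis"})
print(consent.allows("diagnosis"))  # granted purpose
print(consent.allows("research"))   # secondary use was never granted
consent.withdraw()
print(consent.allows("diagnosis"))  # denied after opt-out
```

Checking `allows("research")` before any secondary use is one way to ensure images are not repurposed without explicit consent.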

Ethics of AI Rash Detection: Implications and Risks

Misdiagnosis Risk with AI-Assisted Diagnosis

Over-reliance on AI outputs can crowd out clinical judgment:

- Patients may skip in-person exams after a low-risk score.

- Clinicians might defer too much to AI flags, missing subtle cues.

Misdiagnosis risk increases without proper medical oversight.

Recommendation: Implement dual-review systems in which the AI's assessment is validated by a clinician before any final decision.
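A dual-review rule can be expressed very simply in code: the AI output is treated as a suggestion, and no case is final until a clinician has recorded a label. The following is a minimal sketch under that assumption; the `Case` structure and the labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    case_id: str
    ai_label: str
    ai_confidence: float
    clinician_label: Optional[str] = None  # None until a clinician reviews


def final_decision(case: Case) -> str:
    """Return the final label. The AI output alone is never final."""
    if case.clinician_label is None:
        return "pending clinician review"
    if case.clinician_label != case.ai_label:
        # Keep the disagreement visible so it can be audited later.
        return f"{case.clinician_label} (clinician override of AI: {case.ai_label})"
    return case.clinician_label


case = Case("c-001", ai_label="eczema", ai_confidence=0.91)
print(final_decision(case))   # pending clinician review
case.clinician_label = "psoriasis"
print(final_decision(case))   # psoriasis (clinician override of AI: eczema)
```

Even a high AI confidence score does not bypass the review step here; that is the point of the design, since a confident model can still be wrong.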

Liability and Accountability in AI Errors

When AI errs in diagnosis, responsibility can fall on developers, healthcare providers, or data handlers. Existing legal frameworks often lag behind technological advances.

- Vague lines of responsibility can delay patient recourse.

- Shared liability models propose that app makers and clinicians share risk.

Professional guidelines should outline duties in AI-augmented care to clarify accountability.

Socio-Economic Impact and Health Equity

AI tools can improve access in underserved areas but may widen disparities if only available to those who can pay.

- Commercial apps often require subscription fees or pay-per-use models.

- Low-income or rural populations may lack devices or data plans.

Recommendations: Deploy sliding-scale pricing, open-source models, and NGO partnerships to distribute devices and training.

Ethics of AI Rash Detection: Regulatory and Industry Perspectives

Current Regulations & WHO Guidelines

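- WHO’s ethical principles for AI in healthcare: protecting autonomy; promoting human well-being, safety, and the public interest; ensuring transparency and explainability; fostering responsibility and accountability; ensuring inclusiveness and equity; and promoting AI that is responsive and sustainable. Source: WHO ethical principles for AI in healthcare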

- HITRUST AI Assurance framework: risk management for AI systems. Source: HITRUST AI Assurance framework

Stakeholder Collaboration and Perspectives

- Tech developers emphasize innovation, rigorous validation, and user experience.

- Clinicians prioritize patient safety, workflow integration, and clinical evidence.

- Ethicists call for inclusive governance, ongoing oversight, and community input.

Frameworks for Ethical Improvement

- Multi-disciplinary ethics boards to review AI tools continuously.

- Continuous model monitoring with public performance reporting.

- Pilot programs testing explainable AI interfaces in clinical settings.
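Continuous monitoring can start with something as simple as tracking sensitivity over a rolling window of clinician-confirmed cases and flagging the model for review when it drops. The sketch below assumes that confirmed diagnoses are fed back to the system; the `SensitivityMonitor` class, window size, and threshold are illustrative choices, not established standards.

```python
from collections import deque


class SensitivityMonitor:
    """Tracks how often the AI flagged cases that a clinician later
    confirmed as positive, over the most recent `window` cases."""

    def __init__(self, window: int = 100, threshold: float = 0.85):
        self.outcomes = deque(maxlen=window)  # True = AI flagged the case
        self.threshold = threshold

    def record(self, ai_flagged: bool) -> None:
        """Record one clinician-confirmed positive case."""
        self.outcomes.append(ai_flagged)

    def sensitivity(self):
        """Share of confirmed positives the AI caught, or None if no data."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True when rolling sensitivity has fallen below the threshold."""
        value = self.sensitivity()
        return value is not None and value < self.threshold


monitor = SensitivityMonitor(window=4, threshold=0.75)
for flagged in [True, True, True, False, False]:
    monitor.record(flagged)
print(monitor.sensitivity(), monitor.needs_review())
```

The same rolling figure is the kind of number that could go into a public performance report, ideally broken down by demographic group so that a drop affecting one group is not hidden in the overall average.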

Future Trends in Ethics of AI Rash Detection

- More representative and diverse training datasets to reduce bias.

- Advances in explainable AI and user-centric model interpretability.

- Real-world impact studies to track outcomes and unintended consequences.

- Regular reviews of consent processes, data governance, and security standards.

Balancing innovation with patient safety and autonomy will define the future of AI dermatology.

Conclusion

AI-driven rash detection offers faster, broader dermatology access but raises ethical concerns around data privacy, algorithmic bias, informed consent, liability, and equity. Building robust, multi-stakeholder ethical frameworks, including pilot ethics audits, expanded dataset initiatives, and collaborative governance, is essential to ensure safe, equitable, and transparent skin diagnosis for all.

FAQ

- How is user privacy protected in AI rash detection apps?

Responsible apps encrypt data in transit and at rest, anonymize or pseudonymize images and metadata, and publish clear privacy policies to safeguard personal health data.

- What steps are taken to reduce algorithmic bias?

Developers can build diverse datasets, apply fairness metrics, and conduct regular bias audits to work toward equitable performance across demographics.

- Who is liable if the AI makes a misdiagnosis?

Liability often involves shared responsibility between app developers and healthcare providers, guided by professional and legal frameworks.

- How do users give informed consent?

Consent portals and explainable AI dashboards inform users about data usage, AI roles, and opt-out options in clear language.

- What future oversight measures are recommended?

Continuous model monitoring, public performance reporting, and multi-disciplinary ethics boards help maintain trust and safety.