Understanding and Mitigating Bias in AI Rash Detection: Ensuring Fair and Equitable Dermatology Tools

Explore how bias in AI rash detection affects diagnosis and treatment, and learn strategies to ensure fair and equitable dermatology tools for diverse populations.

Estimated reading time: 8 minutes

Key Takeaways

- Bias in AI rash detection can lead to misdiagnoses and delayed treatments for underrepresented skin tones.

- Non-representative data, inconsistent labeling, and feature selection skew AI dermatology tools toward certain demographics.

- Unaddressed bias erodes trust, exacerbates health disparities, and compromises patient outcomes.

- Continuous monitoring, representative sampling, and clear fairness metrics are essential for reliable models.

- Collaboration among clinicians, developers, and regulators fosters ethical, equitable AI in dermatology.

Table of Contents

- Introduction

- Understanding Bias in AI

- Dermatology AI Bias: Impact on Rash Detection

- Investigative Analysis: AI Bias Metrics

- Strategies for Fair AI Dermatology Diagnosis

- Future Directions for Ethical AI Dermatology

- Spotlight on Practical AI Tools

- Conclusion

- FAQ

Introduction

Bias in AI rash detection emerges when an AI model classifies skin rashes with systematic, unfair differences across patient groups. This often stems from non-representative data or flawed model design that skews results. Fair and accurate AI dermatology tools are crucial for timely, reliable diagnoses and for ensuring that all patients receive equitable care. Without these safeguards, marginalized populations may face misdiagnoses or treatment delays, widening health disparities. Ultimately, biased AI can erode trust in medical systems and harm patient outcomes.

Source: PMC article on AI bias in dermatology

Understanding Bias in AI

*Bias in AI rash detection* refers to systematic differences in model predictions across demographic groups, whereas *AI dermatology tools* should aim to be equally accurate for every group. In machine learning, bias arises from skewed training data, subjective labeling, or algorithmic design choices, and it shows up when outputs are consistently more accurate for one subgroup than for another.

Sources of bias in AI dermatology tools:

- Data Collection

– Training sets lack representative images across Fitzpatrick skin types I–VI, ages, and genders, limiting generalizability.

– Models may memorize lighter skin presentations and underperform on darker tones.

- Labeling & Annotation

– Human annotators apply inconsistent standards or let unconscious biases affect labels, introducing errors.

– Mislabeling conditions on underrepresented groups skews training signals.

- Feature Selection

– Developers may prioritize features (e.g., redness intensity) that appear differently across skin tones.

– The model overlooks subtle cues in pigmented skin, reducing sensitivity.

- Algorithm & Deployment

– Feedback loops reinforce historical healthcare biases when models are retrained on their own outputs.

– Disadvantaged groups suffer cumulative errors and lower quality of care.

Source: Onix Systems blog on AI bias detection and mitigation
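The data collection concern above can be checked before any training happens. The following is a minimal sketch, assuming each training image carries a Fitzpatrick label in its metadata; the function name `composition_report`, the 10% `min_share` cutoff, and the toy label counts are illustrative assumptions, not values from the cited sources.

```python
from collections import Counter

FITZPATRICK_TYPES = ["I", "II", "III", "IV", "V", "VI"]

def composition_report(skin_types, min_share=0.10):
    """Report each Fitzpatrick type's share of a dataset.

    min_share is a hypothetical cutoff below which a type is flagged
    as underrepresented; choose it to suit the target population.
    """
    counts = Counter(skin_types)
    total = len(skin_types)
    report = {}
    for t in FITZPATRICK_TYPES:
        count = counts.get(t, 0)
        share = count / total if total else 0.0
        report[t] = {
            "count": count,
            "share": share,
            "underrepresented": share < min_share,
        }
    return report

# Toy metadata (made up for illustration): one label per training image.
labels = ["I"] * 30 + ["II"] * 40 + ["III"] * 20 + ["IV"] * 6 + ["V"] * 3 + ["VI"] * 1
report = composition_report(labels)
flagged = [t for t, row in report.items() if row["underrepresented"]]
print(flagged)  # ['IV', 'V', 'VI']
```

A report like this only shows that a gap exists. Closing it still requires collecting or licensing more images for the flagged types.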

Dermatology AI Bias: Impact on Rash Detection

When bias in AI rash detection manifests, performance drops on darker skin tones, leading to misdiagnosis or missed diagnosis and, in turn, to unequal treatment and delayed care.

Misdiagnosis and Unequal Treatment

- Rashes that present differently on pigmented skin (e.g., erythema appearing as hyperpigmentation) may be overlooked or misclassified.

- Delayed identification of conditions like eczema, psoriasis, or Lyme disease increases risk of complications.

- Marginalized patients may receive incorrect prescriptions or unnecessary referrals.

Consequences for Marginalized Groups

- Exacerbation of existing health disparities due to late or missed diagnoses.

- Erosion of patient trust in AI systems and healthcare providers.

- Increased safety risks when critical conditions remain untreated.

- Negative outcomes that disproportionately affect underrepresented populations.

Investigative Analysis: AI Bias Metrics

Current research highlights the need for continuous monitoring and external validation of AI rash detection models on diverse cohorts. Best practices involve quantitative metrics, transparency, and process checks to combat bias in AI rash detection.

Current Research Emphasis

- Continual Surveillance: Ongoing performance tracking to spot drift in subgroup accuracy.

- Recalibration: Adjusting models with fresh, representative data periodically.

- External Validation: Testing on independent datasets covering all Fitzpatrick skin types.

Source: PMC article on AI bias in dermatology
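Continual surveillance can be as simple as comparing each subgroup's current sensitivity with a stored baseline. This is a rough sketch under stated assumptions: the subgroup names, the baseline figures, and the 0.05 `max_drop` tolerance are all hypothetical, and a production system would also account for sample size and statistical uncertainty.

```python
def sensitivity(y_true, y_pred):
    """True positive rate, TP / (TP + FN); None when there are no positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if (tp + fn) else None

def drifted_groups(baseline, current, max_drop=0.05):
    """Return subgroups whose sensitivity fell more than max_drop below baseline."""
    return sorted(
        group
        for group, base in baseline.items()
        if current.get(group) is not None and base - current[group] > max_drop
    )

# Hypothetical sensitivities by grouped Fitzpatrick type.
baseline = {"I-II": 0.90, "III-IV": 0.88, "V-VI": 0.85}
current = {"I-II": 0.89, "III-IV": 0.86, "V-VI": 0.74}
print(drifted_groups(baseline, current))  # ['V-VI']
```

A flagged subgroup is a prompt for recalibration or for fresh external validation, not a diagnosis of the cause.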

Bias-Handling Methods in AI Dermatology Tools

- Representative Sampling vs. Synthetic Augmentation

- Integrated Fairness Checks

- Transparency in Training Data Composition
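One way to picture the representative sampling option is a balanced draw that takes up to the same number of images from every skin type group. The sketch below is illustrative only: `balanced_sample`, the `fitzpatrick` metadata key, and the toy records are assumptions. Groups that cannot fill their quota are the ones where more collection, or synthetic augmentation, would be needed.

```python
import random
from collections import defaultdict

def balanced_sample(records, key, per_group, seed=0):
    """Draw up to per_group records from each group, without replacement."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    by_group = defaultdict(list)
    for record in records:
        by_group[record[key]].append(record)
    sample = []
    for group in sorted(by_group):
        items = by_group[group]
        k = min(per_group, len(items))  # small groups contribute all they have
        sample.extend(rng.sample(items, k))
    return sample

# Toy records (made up): 50 images of type I, only 5 of type V.
records = [{"id": i, "fitzpatrick": "I"} for i in range(50)]
records += [{"id": 50 + i, "fitzpatrick": "V"} for i in range(5)]
sample = balanced_sample(records, key="fitzpatrick", per_group=10)
counts = {g: sum(1 for r in sample if r["fitzpatrick"] == g) for g in ("I", "V")}
print(counts)  # {'I': 10, 'V': 5}
```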

Metrics and Evaluation Criteria for Bias Detection

- Error Rate Differentials (Δ False Negative Rate and Δ False Positive Rate across subgroups)

- Sensitivity & Specificity by Fitzpatrick category, gender, and age

- Fairness Metrics: demographic parity, equalized odds, calibration within groups
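The error rate differentials listed above can be computed directly from labels, predictions, and a subgroup tag per case. This is a minimal sketch on made-up data; the function names are illustrative. It covers the false negative rate gap, the false positive rate gap (both near zero is what equalized odds asks for), and the positive prediction rate gap (demographic parity). Calibration within groups needs predicted probabilities and is not shown.

```python
def rates(y_true, y_pred):
    """False negative rate, false positive rate, and positive prediction rate."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 1 and p == 0:
            fn += 1
        elif t == 0 and p == 1:
            fp += 1
        else:
            tn += 1
    n = tp + fp + tn + fn
    return {
        "fnr": fn / (fn + tp) if (fn + tp) else None,
        "fpr": fp / (fp + tn) if (fp + tn) else None,
        "positive_rate": (tp + fp) / n if n else None,
    }

def rates_by_group(y_true, y_pred, groups):
    """Compute rates() separately for each subgroup label."""
    result = {}
    for g in sorted(set(groups)):
        idx = [i for i, gg in enumerate(groups) if gg == g]
        result[g] = rates([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return result

def gap(per_group, metric):
    """Largest minus smallest value of a metric across subgroups."""
    values = [m[metric] for m in per_group.values() if m[metric] is not None]
    return max(values) - min(values)

# Toy data (made up): 8 cases per group, 1 = rash present / predicted.
y_true = [1, 1, 1, 1, 0, 0, 0, 0] * 2
y_pred = [1, 1, 1, 0, 0, 0, 0, 1] + [1, 1, 0, 0, 0, 0, 0, 0]
groups = ["lighter"] * 8 + ["darker"] * 8

per_group = rates_by_group(y_true, y_pred, groups)
print(gap(per_group, "fnr"))            # 0.25
print(gap(per_group, "fpr"))            # 0.25
print(gap(per_group, "positive_rate"))  # 0.25
```

In this toy case the model misses half of the true rashes in the `darker` group but only a quarter in the `lighter` group, which is the pattern the Δ False Negative Rate metric is meant to surface.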

Strategies for Fair AI Dermatology Diagnosis

- Diversify Datasets: Systematically collect images spanning Fitzpatrick I–VI, all ages, genders, and ethnicities.

- Inclusive Design & Labeling: Engage multidisciplinary annotation teams, hold consensus-building sessions, and include cultural competence training.

- Regular Bias Audits: Schedule quarterly subgroup performance reviews with alert thresholds and corrective protocols.

- Policy & Industry Standards: Advocate transparent model documentation and third-party fairness evaluations.
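A quarterly bias audit with alert thresholds might look like the sketch below. The sensitivity floor of 0.80, the maximum gap of 0.10, and the subgroup figures are hypothetical placeholders. Real thresholds should come from clinical and regulatory input.

```python
def audit(sensitivity_by_group, min_sensitivity=0.80, max_gap=0.10):
    """Return alert messages for subgroups below the floor or for a wide gap."""
    if not sensitivity_by_group:
        return []
    alerts = []
    for group in sorted(sensitivity_by_group):
        value = sensitivity_by_group[group]
        if value < min_sensitivity:
            alerts.append(
                f"{group}: sensitivity {value:.2f} below floor {min_sensitivity:.2f}"
            )
    values = list(sensitivity_by_group.values())
    spread = max(values) - min(values)
    if spread > max_gap:
        alerts.append(f"sensitivity gap {spread:.2f} exceeds limit {max_gap:.2f}")
    return alerts

# Hypothetical results from one quarterly review.
quarterly = {"I-II": 0.91, "III-IV": 0.87, "V-VI": 0.76}
alerts = audit(quarterly)
for message in alerts:
    print(message)
# V-VI: sensitivity 0.76 below floor 0.80
# sensitivity gap 0.15 exceeds limit 0.10
```

Each alert would then feed the corrective protocol, for example targeted data collection or retraining, followed by a repeat audit.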

Future Directions for Ethical AI Dermatology

Efforts to mitigate bias in AI rash detection must evolve through collaboration among clinicians, developers, and regulators if these tools are to remain fair and trustworthy.

- For Clinicians: Request demographic performance reports, monitor outcomes, and use AI as support, not sole decision-maker.

- For Developers: Integrate fairness objectives into loss functions, publish data summaries, and use differential privacy.

- For Regulators: Mandate pre-market bias mitigation plans, require post-market surveillance, and establish independent certification.
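As one illustration of integrating a fairness objective into a loss function, the sketch below adds a penalty proportional to the gap between the best and worst subgroup's average loss. This is only one of several possible formulations. The penalty form, the weight `lam`, and the toy numbers are assumptions rather than a method described in the sources.

```python
import math

def bce(y, p, eps=1e-12):
    """Binary cross-entropy for one example, with clipping for stability."""
    p = min(max(p, eps), 1 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def fairness_penalized_loss(y_true, probs, groups, lam=1.0):
    """Mean BCE plus lam times the gap between subgroup mean losses."""
    losses = [bce(y, p) for y, p in zip(y_true, probs)]
    overall = sum(losses) / len(losses)
    per_group = {}
    for g in set(groups):
        g_losses = [l for l, gg in zip(losses, groups) if gg == g]
        per_group[g] = sum(g_losses) / len(g_losses)
    penalty = max(per_group.values()) - min(per_group.values())
    return overall + lam * penalty

# Toy batch (made up): the model is less confident on group "B".
y_true = [1, 0, 1, 0]
probs = [0.9, 0.1, 0.6, 0.4]
groups = ["A", "A", "B", "B"]

plain = fairness_penalized_loss(y_true, probs, groups, lam=0.0)
penalized = fairness_penalized_loss(y_true, probs, groups, lam=1.0)
print(plain < penalized)  # True
```

With `lam=0.0` this reduces to ordinary mean cross-entropy. Raising `lam` makes the optimizer trade some overall accuracy for a smaller gap between subgroups.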

Spotlight on Practical AI Tools

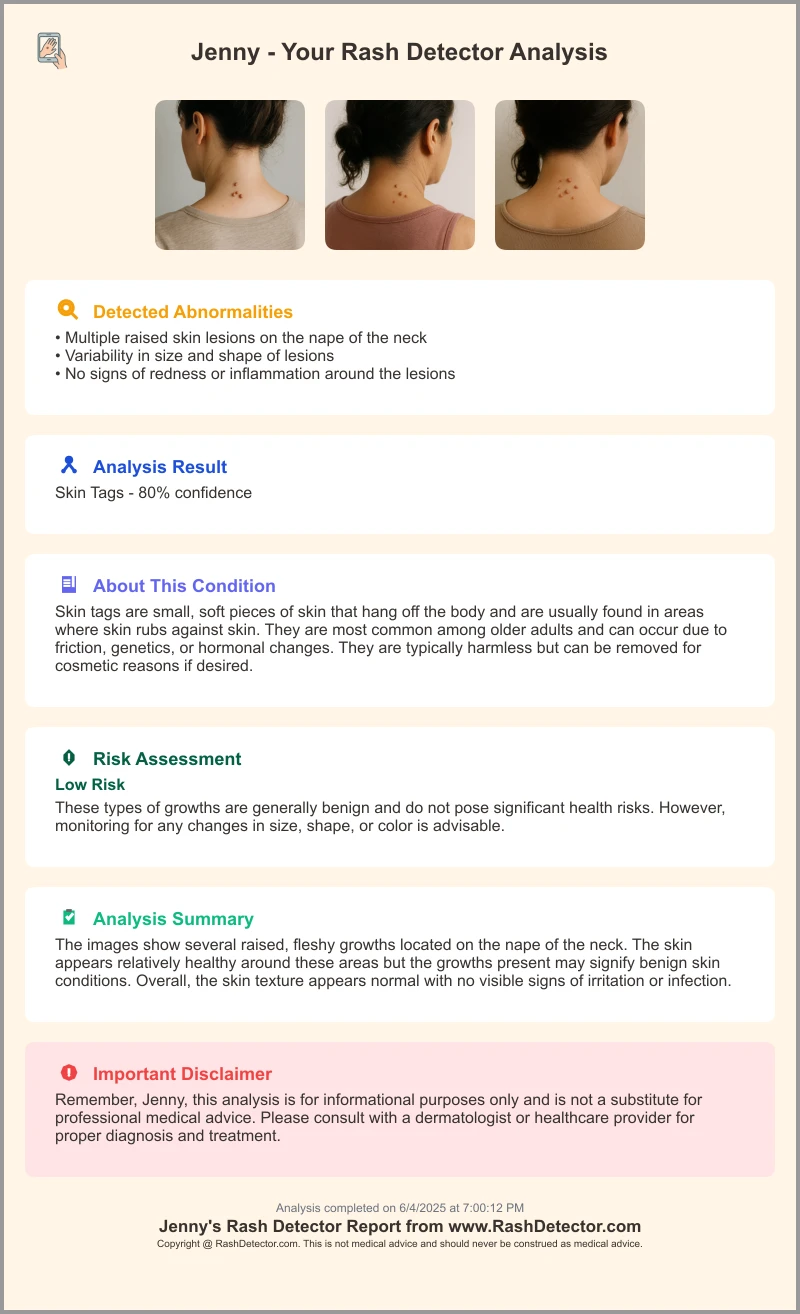

Tools like Rash Detector offer instant AI skin analysis by uploading three images for a rapid, impartial report. Below is a sample report illustrating key confidence scores and risk assessments:

Conclusion

Bias in AI rash detection presents a clear threat to equitable healthcare, disproportionately affecting underrepresented populations. We examined sources of bias—from data collection to deployment—and explored its impacts on diagnosis and trust. Systemic mitigation strategies, including dataset diversification, inclusive labeling, bias audits, and transparent documentation, are essential. Stakeholders across clinical, technical, and regulatory domains must commit to fairness, transparency, and ongoing improvement. Through collaboration and further research, AI rash detection tools can serve all patient populations equitably and enhance dermatology care for everyone.

FAQ

- What causes bias in AI rash detection?

Bias often stems from non-representative datasets, inconsistent annotation, and algorithmic design choices that favor certain demographic groups.

- How can developers detect bias in their models?

By implementing continuous performance monitoring, subgroup evaluation with fairness metrics, and external validation on diverse cohorts.

- What steps ensure fair AI dermatology tools?

Diversify training data, conduct regular bias audits, include multidisciplinary annotators, and adhere to transparent industry standards.

- Why is external validation important?

It verifies model performance on independent datasets, ensuring accuracy across all skin types, ages, and genders.

- Who should be involved in bias mitigation?

Clinicians, data scientists, ethicists, and regulators must collaborate to design, audit, and certify fair AI systems.