Navigating Privacy Concerns with AI Rash Diagnosis

Explore the privacy concerns raised by AI rash diagnosis, how these apps secure data, and best practices for protecting personal health information when using AI health apps.

Estimated reading time: 8 minutes

Key Takeaways

- AI rash diagnosis leverages deep learning to deliver instant skin assessments but relies on sensitive user images and metadata.

- Primary privacy concerns include data collection, storage, sharing, and risks of breaches or unauthorized use.

- Robust security measures—encryption, anonymization, access controls—are essential to maintain user trust.

- Compliance with GDPR, HIPAA, and other regulations is crucial, though gaps in oversight still exist.

- Adopting privacy-by-design, transparent policies, and informed user practices helps safeguard personal health data.

Table of Contents

- Section 1: Understanding AI Rash Diagnosis and Privacy Concerns

- Section 2: Identifying Privacy Concerns

- Section 3: Data Security and User Trust

- Section 4: Regulatory and Legal Considerations

- Section 5: Best Practices for Protecting User Privacy

- Conclusion

Section 1: Understanding AI Rash Diagnosis and Privacy Concerns

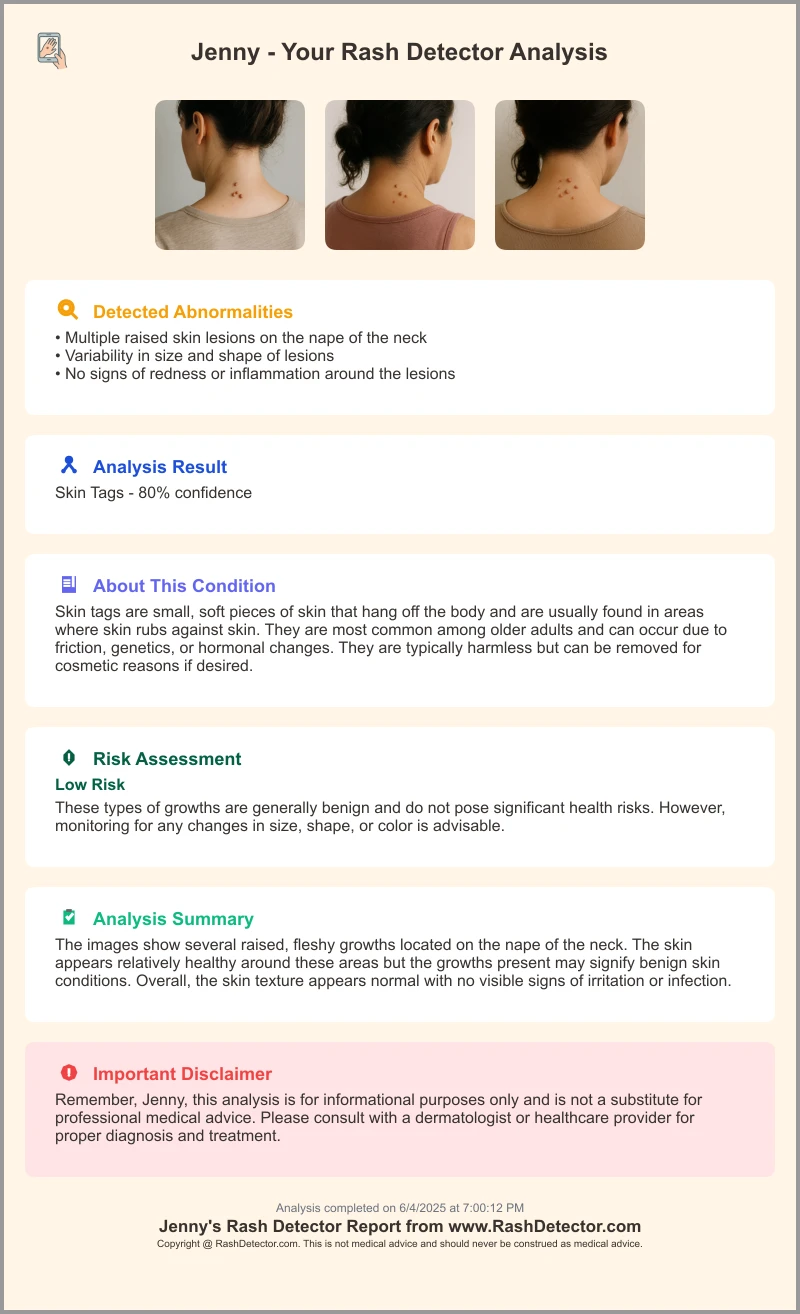

AI-powered rash diagnosis uses machine learning models, often convolutional neural networks, trained on large collections of labeled dermatological images to classify skin conditions. Within seconds, these tools analyze a user's uploaded photo and accompanying metadata, then suggest possible diagnoses or recommend clinical follow-up.

When users entrust apps with intimate health data, transparency and security become paramount. Risks from AI-enabled health technologies topped ECRI’s Top 10 Health Technology Hazards list for 2025, a reminder that these tools carry real hazards alongside their promise.

A typical session runs in three steps:

- Photo upload: A user captures or selects a high-resolution image of the rash.

- Image analysis: A deep learning model looks for visual patterns such as color changes, texture, and size.

- Diagnosis suggestion: The app returns a likely condition or advises seeing a clinician.

Benefits of AI rash diagnosis:

- Earlier detection of serious conditions such as melanoma, and faster recognition of common ones such as dermatitis.

- Faster, user-friendly assessments without a clinic wait.

- Personalized treatment pathways based on user history and image analysis.

For instance, some apps flag suspicious moles early, prompting timely doctor visits. A recent review of machine learning for skin disease identification highlights how large image libraries and regular model updates drive accuracy.

Section 2: Identifying Privacy Concerns

AI’s power comes from data, and that same data creates risk. Key privacy concerns include:

A. Data Collection

- High-resolution rash images plus metadata (age, gender, location, medical history).

- Detailed information improves AI accuracy but heightens personal exposure.
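A common way to balance accuracy against exposure is to generalize metadata before it leaves the phone. The sketch below is a minimal illustration, not any particular app's pipeline: the field names, the whitelist, and the 10-year age bands are all assumptions.

```python
from typing import Any

# Hypothetical whitelist: the only metadata fields this sketch forwards to the server.
ALLOWED_FIELDS = {"age", "gender", "country"}


def age_band(age: int, width: int = 10) -> str:
    """Replace an exact age with a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalize_metadata(record: dict[str, Any]) -> dict[str, Any]:
    """Drop fields outside the whitelist and coarsen the exact age."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "age" in kept:
        kept["age"] = age_band(kept["age"])
    return kept


if __name__ == "__main__":
    raw = {
        "name": "Jane Doe",
        "age": 34,
        "gender": "F",
        "postcode": "SW1A 1AA",
        "country": "GB",
        "medical_history": ["eczema"],
    }
    print(generalize_metadata(raw))
    # {'age': '30-39', 'gender': 'F', 'country': 'GB'}
```

Which fields belong on the whitelist depends on what the model actually needs; if medical history measurably improves accuracy, it might be kept but coarsened rather than dropped.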

B. Data Storage & Sharing

- Images are often uploaded to and stored on remote cloud servers rather than analyzed on the device.

- Third-party integrations may share data for research or marketing.

- Insecure servers or weak APIs can expose protected health information (PHI).
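Weak APIs often come down to clients that skip certificate checks or accept outdated protocol versions. Assuming the app uploads images over HTTPS from Python, a minimal sketch with the standard `ssl` module builds a context that verifies the server's certificate and hostname and refuses anything older than TLS 1.2:

```python
import ssl


def make_upload_context() -> ssl.SSLContext:
    """Build a client-side TLS context for uploading rash images."""
    # create_default_context() loads the system CA store and turns on
    # certificate verification and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 outright.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


if __name__ == "__main__":
    ctx = make_upload_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
    # True True
```

The returned context can be passed as the `context` argument to `urllib.request.urlopen` or `http.client.HTTPSConnection`; the upload endpoint itself is app-specific and not shown.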

C. Potential Risks

- Data breaches: Exposed PHI can lead to identity theft.

- Unauthorized resale: Data might be sold to advertisers or insurers for profiling.

- Cyberattacks: Ransomware can lock or leak sensitive health repositories.

Even apps not regulated as “medical devices” can hold highly sensitive data. Users may not know who sees their images or how long they’re retained.
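Retention is one concern developers can bound mechanically. The sketch below assumes a hypothetical in-memory list of uploads with UTC timestamps and a 30-day window chosen purely for illustration; a real service would apply the same cutoff to its database, object storage, and backups.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Illustrative retention window; the right value depends on the app's stated policy.
RETENTION = timedelta(days=30)


@dataclass
class StoredImage:
    image_id: str
    uploaded_at: datetime  # timezone-aware UTC timestamp


def purge_expired(images: list[StoredImage], now: datetime,
                  retention: timedelta = RETENTION) -> tuple[list[StoredImage], list[str]]:
    """Split images into those still within the retention window and the IDs to delete."""
    cutoff = now - retention
    kept = [img for img in images if img.uploaded_at >= cutoff]
    expired = [img.image_id for img in images if img.uploaded_at < cutoff]
    return kept, expired


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    store = [
        StoredImage("a", now - timedelta(days=3)),
        StoredImage("b", now - timedelta(days=45)),
    ]
    kept, expired = purge_expired(store, now)
    print([img.image_id for img in kept], expired)
    # ['a'] ['b']
```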

Section 3: Data Security and User Trust

Strong data security is the backbone of user trust. When people feel safe, they’re more likely to engage and share vital information. Key measures include:

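- Encryption in transit and at rest: Use TLS 1.2 or later in transit and AES-256 at rest. True end-to-end encryption, where only the user's devices hold the keys, is rarely feasible when a server must analyze the image.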

- Data anonymization & pseudonymization: Remove direct identifiers (names, emails, device IDs) or replace them with tokens to reduce re-identification risk.

- Access controls & audit logs: Implement role-based permissions and track PHI access.

- Transparency: Publish clear statements on data collection, storage, and sharing practices.

- Bias testing: Regularly evaluate AI models across skin tones to reduce the risk of misdiagnosis and ensure consistent performance for all users.
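Bias testing can begin with a per-group accuracy comparison on a labeled evaluation set. In this sketch the Fitzpatrick skin-type groupings, the record format, the example predictions, and the 5-percentage-point tolerance are all illustrative assumptions:

```python
from collections import defaultdict

# Each record: (skin_type_group, true_label, predicted_label). Values are made up.
Record = tuple[str, str, str]


def accuracy_by_group(records: list[Record]) -> dict[str, float]:
    """Return the fraction of correct predictions for each skin-type group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def flag_gaps(acc: dict[str, float], tolerance: float = 0.05) -> list[str]:
    """List groups whose accuracy trails the best group by more than `tolerance`."""
    best = max(acc.values())
    return sorted(g for g, a in acc.items() if best - a > tolerance)


if __name__ == "__main__":
    results = [
        ("I-II", "eczema", "eczema"),
        ("I-II", "psoriasis", "psoriasis"),
        ("V-VI", "eczema", "psoriasis"),
        ("V-VI", "psoriasis", "psoriasis"),
    ]
    acc = accuracy_by_group(results)
    print(acc)             # {'I-II': 1.0, 'V-VI': 0.5}
    print(flag_gaps(acc))  # ['V-VI']
```

Accuracy alone can hide problems; a fuller audit would also compare sensitivity for serious conditions such as melanoma and check that each group has enough samples to be meaningful.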

For deeper insights, see our deep dive post or compare different AI rash tools and their privacy features.

Consumers can try lightweight AI analysis in apps like Rash Detector, which provides instant, privacy-aware skin assessments.

Section 4: Regulatory and Legal Considerations

AI rash apps navigate a complex web of regulations. Major frameworks include:

GDPR Overview

- Explicit consent: Health data is a special category under GDPR, so apps typically need users' explicit, opt-in consent before processing it.

- Data minimization: Collect only what is strictly necessary.

- User rights: Individuals can request access to, correction of, or deletion of their personal data.
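In code, consent and the user rights above reduce to a few checks: refuse to store anything until the user has opted in, return everything held on request, and delete it all on request. The class and method names below are hypothetical, and this in-memory sketch ignores backups and copies held by third parties, which a real erasure process would also have to cover.

```python
from dataclasses import dataclass, field


class ConsentRequired(Exception):
    """Raised when an upload arrives before the user has opted in."""


@dataclass
class RashImageStore:
    """Hypothetical store that only accepts uploads after explicit opt-in."""
    consents: set[str] = field(default_factory=set)
    images: dict[str, list[bytes]] = field(default_factory=dict)

    def grant_consent(self, user_id: str) -> None:
        # Call only in response to an affirmative user action (opt-in, not pre-ticked).
        self.consents.add(user_id)

    def withdraw_consent(self, user_id: str) -> None:
        self.consents.discard(user_id)

    def upload(self, user_id: str, image: bytes) -> None:
        if user_id not in self.consents:
            raise ConsentRequired(f"no consent on record for {user_id}")
        self.images.setdefault(user_id, []).append(image)

    def export(self, user_id: str) -> list[bytes]:
        # Right of access: return a copy of everything held for this user.
        return list(self.images.get(user_id, []))

    def erase(self, user_id: str) -> int:
        # Right to erasure: delete the user's images and consent record; return count removed.
        removed = len(self.images.pop(user_id, []))
        self.consents.discard(user_id)
        return removed


if __name__ == "__main__":
    store = RashImageStore()
    try:
        store.upload("u1", b"...")
    except ConsentRequired as err:
        print("blocked:", err)
    store.grant_consent("u1")
    store.upload("u1", b"img-bytes")
    print(len(store.export("u1")))  # 1
    print(store.erase("u1"))        # 1
    print(store.export("u1"))       # []
```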

HIPAA Overview

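- Covered entities: Health plans, healthcare clearinghouses, and healthcare providers that conduct certain transactions electronically. A consumer app not offered by or on behalf of one of these is generally outside HIPAA.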

- Business associates: Third parties handling e-PHI on behalf of covered entities.

- Security standards: Administrative, physical, and technical safeguards for e-PHI.
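Access control and audit controls are both among the technical safeguards. The sketch below shows one way they fit together: every access attempt is checked against a role-to-permission table and recorded in an audit log whether or not it succeeds. The roles, permissions, and log fields are illustrative assumptions, not requirements drawn from HIPAA itself.

```python
from datetime import datetime, timezone

# Illustrative role-to-permission table; a real deployment defines its own.
ROLE_PERMISSIONS = {
    "dermatologist": {"read_image", "read_metadata"},
    "support_agent": {"read_metadata"},
    "ml_engineer": set(),  # no direct access to identifiable records in this example
}

AUDIT_LOG: list[dict[str, str]] = []


def access_record(user: str, role: str, action: str, record_id: str) -> bool:
    """Check `action` against the caller's role and log the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "record": record_id,
        "outcome": "granted" if allowed else "denied",
    })
    return allowed


if __name__ == "__main__":
    print(access_record("dr_lee", "dermatologist", "read_image", "rec-42"))  # True
    print(access_record("sam", "support_agent", "read_image", "rec-42"))     # False
    print([entry["outcome"] for entry in AUDIT_LOG])  # ['granted', 'denied']
```

Logging denied attempts as well as granted ones is what makes the log useful for spotting misuse. A production system would write these entries to tamper-resistant storage rather than a Python list.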

Regulatory Gaps

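- Medical device classification: Many AI rash tools are positioned as informational or wellness products, so they may never undergo the pre-market review that applies to medical devices.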

- Cross-border data flows: EU–US data transfers bring several legal regimes into play at once.

- Emerging tech: AI advances may outpace regulations, leaving privacy loopholes.

Section 5: Best Practices for Protecting User Privacy

Developers & Companies

- Encrypt data in transit (TLS 1.2 or later) and at rest (AES-256).

- Apply data-minimization: collect only essential images and metadata.

- Embed privacy-by-design: opt-in consent flows and pseudonymization.

- Publish clear, plain-language privacy policies detailing data use and retention.

- Conduct regular security audits, penetration tests, and bias assessments.
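Pseudonymization, listed above under privacy-by-design, can be as simple as replacing each user ID with a keyed hash before records reach research or analytics systems. This sketch uses HMAC-SHA256 from Python's standard library; the field names are hypothetical, and the per-run key is a stand-in for one held in a separate secrets store. Because anyone holding the key can re-link records, the output is pseudonymous, not anonymous.

```python
import hashlib
import hmac
import secrets

# Assumption: in production this key is loaded from a secrets manager and stored
# separately from the pseudonymized dataset. Here it is generated per run.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map a user ID to a stable token; linking it back requires the key."""
    digest = hmac.new(key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def strip_direct_identifiers(record: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    """Replace the user ID with a pseudonym and drop name and email fields."""
    out = {k: v for k, v in record.items() if k not in {"user_id", "name", "email"}}
    out["subject"] = pseudonymize(record["user_id"], key)
    return out


if __name__ == "__main__":
    rec = {"user_id": "u-1001", "name": "Jane Doe", "email": "jane@example.com",
           "image_ref": "img-77", "condition_guess": "contact dermatitis"}
    safe = strip_direct_identifiers(rec)
    print(sorted(safe))  # ['condition_guess', 'image_ref', 'subject']
    # The same user always maps to the same token, so records can still be linked.
    print(safe["subject"] == pseudonymize("u-1001"))  # True
```

A keyed hash is preferable to a plain SHA-256 of the ID, because user IDs are often guessable and an unkeyed hash can be reversed by hashing every candidate.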

End Users

- Review app permissions; limit camera and location access if not critical.

- Choose apps compliant with GDPR, HIPAA, or equivalent local regulations.

- Avoid uploading identifiable facial images; crop or mask non-involved areas.

- Monitor breach news; revoke permissions or delete accounts if policies change.

Tip: Always read the privacy policy before granting access. A transparent policy signals a commitment to data protection.

Conclusion

AI rash diagnosis brings fast, accessible skin-health insights, but it also raises serious privacy concerns about how images and health data are handled. Robust security measures, transparent practices, and clear regulations are essential to protect users. By working together, developers, regulators, and end users can ensure that innovation does not come at the cost of personal privacy.

Call to Action

- Developers: Adopt privacy-by-design and stay updated on security best practices.

- Regulators: Close gaps and provide clear guidance for AI health apps.

- Users: Demand transparency, verify compliance, and stay informed.

FAQ

- What personal data do AI rash diagnosis apps collect?

- They typically collect high-resolution rash images plus metadata like age, gender, location, and medical history to improve diagnostic accuracy.

- How is my data protected in these apps?

- Well-secured apps encrypt data in transit (TLS) and at rest (AES-256), anonymize or pseudonymize records, restrict access with role-based controls, and undergo regular security audits.

- Are AI rash diagnosis apps regulated?

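- Apps that process the health data of people in the EU fall under GDPR. HIPAA applies only when an app is operated by, or on behalf of, a covered entity such as a healthcare provider or health plan. Many tools also avoid strict medical device classification, creating regulatory gaps.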

- What can I do to safeguard my privacy?

- Review app permissions, choose compliant apps, crop identifiable areas, read privacy policies, and delete data or accounts if policies change.