Ethical Issues in Skin Diagnosis Apps: Navigating AI-Driven Rash Detection Challenges

Explore ethical issues in skin diagnosis apps, focusing on privacy, bias, and transparency, to protect patient welfare and maintain trust in AI-powered dermatology.

Estimated reading time: 8 minutes

Key Takeaways

- AI-driven skin apps raise critical ethical concerns around privacy, bias, transparency, liability, and regulation.

- Protecting sensitive data and ensuring algorithmic fairness are essential for diverse patient care.

- Explainable models and clinician oversight foster trust and accountability.

- Robust regulatory frameworks and multi-stakeholder collaboration are needed for safe deployment.

- Adopt best practices: encryption, diverse datasets, regular audits, and clear user education.

Table of Contents

- Overview of AI-Driven Skin Diagnosis Apps

- Identification of Ethical Issues

  - Data Privacy and Security

  - Algorithmic Bias and Accuracy

  - Transparency and Accountability

- Healthcare Integration Implications

- Regulatory and Oversight Challenges

- Potential Solutions and Best Practices

- Case Studies and Real-World Examples

- Conclusion

1. Overview of AI-Driven Skin Diagnosis Apps

Skin diagnosis apps leverage machine learning to analyze user-submitted images of rashes, lesions, or spots. By employing computer vision and pattern recognition, these tools suggest possible conditions based on models trained on millions of labeled dermatological images. They offer:

- Enhanced accessibility in regions lacking dermatologists

- Potential cost savings versus in-person consultations

- Convenient early screening that may prompt timely medical follow-up

2. Identification of Ethical Issues

2.1 Data Privacy and Security

Handling sensitive skin images demands airtight safeguards. Unique features—like moles or scars—can make de-identification challenging.

Key Concerns

- Re-identification risk despite anonymization techniques

- Users may not fully understand image storage and sharing practices

- Some jurisdictions allow processing without explicit consent

Protect patient privacy with end-to-end encryption, strict access controls, and transparent data-use policies (see secure data handling).
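One small piece of such safeguards can be sketched in code. The example below shows keyed pseudonymization of the user identifier stored next to an image, using only Python's standard library. It is a minimal sketch, not a complete privacy design: the function names, the record layout, and the sample identifier are all hypothetical, and pseudonymization is a complement to encryption and access control, not a substitute for them.

```python
import hashlib
import hmac
import secrets


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest that stands in for the real user ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def build_storage_record(user_id: str, image_bytes: bytes, secret_key: bytes) -> dict:
    """Build metadata for a stored image without keeping the raw user ID.

    The record layout is a hypothetical example, not any app's real schema.
    """
    return {
        "subject": pseudonymize_user_id(user_id, secret_key),
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "image_size_bytes": len(image_bytes),
    }


# Assumption: in practice the key would live in a key-management service,
# separate from the image store. Here it is generated for the demo.
key = secrets.token_bytes(32)
record = build_storage_record("patient-001@example.com", b"fake image bytes", key)
print(record["subject"])
```

Because the digest is keyed, someone who obtains the stored records but not the key cannot simply hash known email addresses to find a match. The image itself can still carry identifying features such as moles or scars, so this step reduces re-identification risk without eliminating it.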

2.2 Algorithmic Bias and Accuracy

Bias arises when training datasets underrepresent certain skin tones: a model that has seen few examples of darker skin tends to perform worse on it, which leads to unequal diagnostic outcomes.

Accuracy Risks

- False positives causing unnecessary alarm

- False negatives leading to delayed or missed diagnoses

- Some developers are aware of bias in their models but neither correct it nor disclose it to users

Mitigate bias with diverse, representative datasets and rigorous validation (see strategies to mitigate algorithmic bias).
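Validation across demographics can be made concrete by computing sensitivity (the share of true positive cases the model catches) separately for each skin-tone group. The sketch below assumes each validation case is labeled with a Fitzpatrick skin-type band; the grouping, the synthetic counts, and the 0.05 gap threshold are illustrative assumptions, not values from any real study.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity per group.

    records: iterable of (group, true_label, predicted_label),
    where label 1 means the condition is present.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, truth, predicted in records:
        if truth == 1:  # only actual positives count toward sensitivity
            if predicted == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    return {
        group: true_pos[group] / (true_pos[group] + false_neg[group])
        for group in set(true_pos) | set(false_neg)
    }


def flag_gaps(sensitivities, max_gap=0.05):
    """Return groups whose sensitivity trails the best group by more than max_gap."""
    best = max(sensitivities.values())
    return sorted(g for g, s in sensitivities.items() if best - s > max_gap)


# Synthetic validation data (hypothetical counts, for illustration only).
sample = (
    [("I-II", 1, 1)] * 9 + [("I-II", 1, 0)] * 1
    + [("III-IV", 1, 1)] * 9 + [("III-IV", 1, 0)] * 1
    + [("V-VI", 1, 1)] * 6 + [("V-VI", 1, 0)] * 4
    + [("V-VI", 0, 0)] * 5  # negatives are ignored by the sensitivity metric
)

sens = sensitivity_by_group(sample)
flagged = flag_gaps(sens)
print(sens, flagged)
```

In this synthetic data the V-VI group is flagged because the model misses 4 of 10 positive cases there, compared with 1 of 10 in the other groups. A real audit would also report specificity and confidence intervals, since small groups produce noisy estimates.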

2.3 Transparency and Accountability

Opaque models erode trust among users and clinicians. Explainability and documented audit trails are vital.

Shared Duty of Care

- Developers must design explainable, validated models

- Clinicians share legal and ethical responsibility for app-based results

- Insufficient risk communication further undermines accountability
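A documented audit trail can be as simple as one structured record per prediction. The following is a minimal sketch under stated assumptions: the field names, the model version string, and the risk thresholds are hypothetical and have no clinical validation behind them.

```python
import json
from datetime import datetime, timezone

# Hypothetical probability bands, checked from highest to lowest.
RISK_BANDS = [(0.70, "high"), (0.30, "moderate"), (0.0, "low")]


def risk_level(probability: float) -> str:
    """Map a model probability in [0, 1] to a coarse, user-facing risk level."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    for threshold, label in RISK_BANDS:
        if probability >= threshold:
            return label
    return "low"


def audit_entry(model_version, condition, probability, reviewed_by=None):
    """Build one audit-trail record for a single prediction."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "suggested_condition": condition,
        "confidence": round(probability, 3),
        "risk_level": risk_level(probability),
        # Records stay "pending" until a clinician signs off.
        "clinician_review": reviewed_by or "pending",
    }


entry = audit_entry("demo-0.1", "contact dermatitis", 0.82)
print(json.dumps(entry, indent=2))
```

Recording the model version alongside each result lets a later audit tie an error to the exact model that produced it, and the review field makes the clinician's share of responsibility visible in the record.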

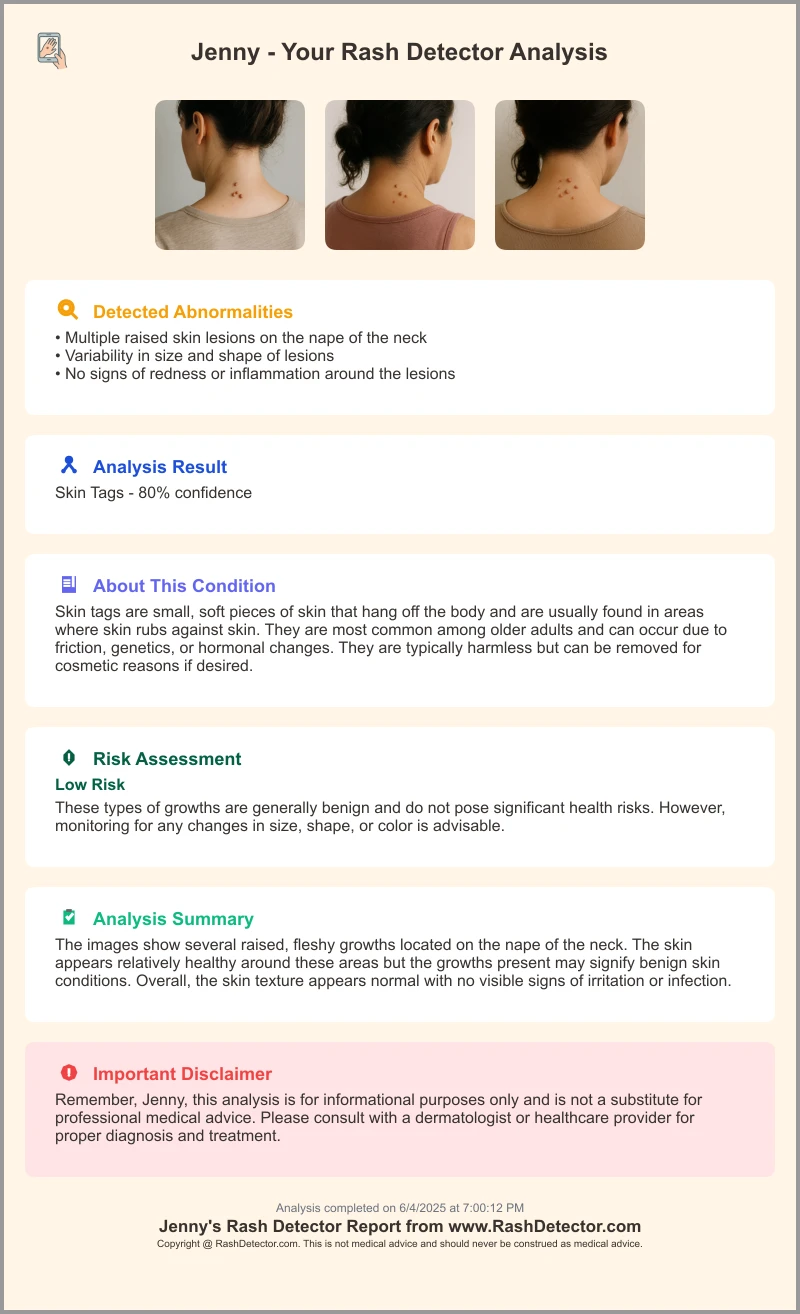

For example, the Rash Detector Skin Rash App provides anonymized sample reports with clear risk levels and confidence scores:

3. Healthcare Integration Implications

AI should augment—not replace—professional expertise. Tools can flag suspicious lesions, but final interpretation must rest with qualified clinicians.

Liability Complexities

- Legal precedents for AI-related misdiagnoses are evolving

- Developers and providers may both be held accountable

- Clear guidelines on shared liability are lacking

Maintain trust through transparent communication about AI’s role and limitations (see blending AI reports with care).

4. Regulatory and Oversight Challenges

Regulation often lags behind AI innovation. Fragmented oversight leads to inconsistent safety and efficacy standards.

Key Gaps

- No unified guidelines for validating ML in healthcare

- Variable requirements for clinical trials and post-market surveillance

- Limited involvement of ethics experts in regulatory review

A multi-stakeholder approach—including regulators, ethicists, clinicians, and patient advocates—is essential for cohesive standards.

5. Potential Solutions and Best Practices

Robust safeguards and ethical frameworks can mitigate AI risks in dermatology.

Recommended Measures

- Stricter Data Protection

  - End-to-end encryption and least-privilege access

  - Comprehensive consent with clear disclosures

- Diversify Training Datasets

  - Include varied skin tones, ages, and ethnic groups

  - Partner with global dermatology networks

- Regular Algorithm Audits

  - Periodic reviews for accuracy, fairness, explainability

  - Protocols for error detection and user notification

- User Education

  - Transparent information on app capabilities and limits

  - In-app tutorials on image capture and privacy settings
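The least-privilege and consent measures above can be sketched as a single access check: a role must hold the permission for an action, and the patient must have consented to the purpose behind it. The roles, action names, and consent labels here are hypothetical placeholders, not a real app's policy.

```python
from dataclasses import dataclass, field

# Each role gets only the actions it needs (least privilege). Hypothetical roles.
ROLE_PERMISSIONS = {
    "patient": {"view_own_images"},
    "clinician": {"view_patient_images", "annotate"},
    "ml_engineer": {"use_for_training"},
}


@dataclass
class ImageRecord:
    owner_id: str
    consents: set = field(default_factory=set)  # purposes the patient agreed to


def is_allowed(role: str, action: str, record: ImageRecord, requester_id: str = "") -> bool:
    """Allow an action only if the role permits it and consent covers its purpose."""
    if action not in ROLE_PERMISSIONS.get(role, set()):
        return False
    if action == "view_own_images":
        return requester_id == record.owner_id
    if action == "use_for_training":
        return "research" in record.consents
    if action in {"view_patient_images", "annotate"}:
        return "clinical_review" in record.consents
    return False  # deny anything not explicitly handled


record = ImageRecord(owner_id="u1", consents={"clinical_review"})
print(is_allowed("clinician", "view_patient_images", record))  # permitted and consented
print(is_allowed("ml_engineer", "use_for_training", record))   # no research consent
```

The check denies by default, so a new action or role has no access until someone grants it explicitly.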

6. Case Studies and Real-World Examples

UK Comparative Study

A 2023 analysis of popular skin cancer apps revealed wide disparities in transparency, performance reporting, and bias mitigation—underscoring the need for clear metrics.

Hypothetical Scenario

An app trained on too few images of melanin-rich skin misses early signs of melanoma in a patient with darker skin, delaying treatment. The scenario shows how dataset bias can translate into real harm.

Regulatory Gaps in Action

Several apps entered emerging markets without independent clinical validation. Post-market reviews later uncovered safety issues, prompting recalls.

Conclusion

The ethical challenges in AI-driven skin diagnosis span privacy, bias, transparency, liability, and regulation. Continuous evaluation, interdisciplinary collaboration, and robust oversight are crucial to protect patient welfare and preserve trust. Stakeholders must work together to ensure that AI reshapes healthcare responsibly.

FAQ

- What are the main ethical risks of skin diagnosis apps? Data privacy breaches, algorithmic bias against certain skin tones, opaque decision-making, and unclear liability.

- How can developers reduce algorithmic bias? By training models on diverse datasets, conducting rigorous validation across demographics, and transparently reporting performance metrics.

- Why is transparency important in AI diagnostics? Explainable AI fosters user and clinician trust, facilitates accountability, and helps clarify limitations.

- Who is liable if an AI tool misdiagnoses? Liability can fall on both developers and healthcare providers; clear legal frameworks are still emerging.

- What regulatory measures are needed? Unified guidelines for clinical validation, post-market surveillance, and inclusion of ethics experts in oversight.