Investigating Racial Bias in Skin AI for Dermatological Diagnosis

Explore the challenges of racial bias in skin AI, its impact on dermatological diagnosis, and strategies to ensure fair care for all skin tones.

Key Takeaways

- Data Imbalance: Underrepresentation of darker skin tones in training sets leads to misdiagnoses.

- Algorithmic Opacity: “Black box” models make bias detection and correction difficult.

- Clinical Impact: Reduced accuracy on darker skin exacerbates health disparities and erodes trust.

- Mitigation Strategies: Diversify datasets, deploy explainable AI, enforce inclusive testing, and strengthen regulation.

Table of Contents

- Background on AI in Dermatology

- Practical Example of AI-driven Analysis

- Sources of Racial Bias in AI Systems

- Investigative Findings on Skin AI Bias

- Implications for Patient Care

- Strategies to Mitigate Racial Bias

- Future Directions and Recommendations

- Conclusion

- FAQ

Background on AI in Dermatology

AI in dermatology uses machine learning and deep learning to support or automate diagnosis. Because these tools can perform unevenly across skin tones, they must be evaluated for fairness as well as accuracy.

What Is Machine Learning?

- Models are trained on labeled skin-image datasets.

- They learn visual patterns associated with conditions such as melanoma.

What Is Deep Learning?

- Multi-layer neural networks automatically extract features such as shape, color, and texture.

- Detection accuracy improves over iterative training cycles.

Common AI Workflow

- Image capture – clinical photos or smartphone images.

- Preprocessing – standardizing size, lighting, and contrast.

- Feature extraction – highlighting lesion borders and color variations.

- Classification – assigning probabilities and suggesting diagnoses.
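The four steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any particular product works: the "captured" image is a tiny grid of RGB tuples, the features are simple color statistics, and the `WEIGHTS` and `BIAS` values are hypothetical placeholders rather than a trained model.

```python
import math

# Hypothetical parameters for illustration only; a real system would learn
# these from labeled images.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.2


def preprocess(image):
    """Scale 0-255 RGB values to the 0-1 range (a stand-in for standardizing)."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in image]


def extract_features(image):
    """Compute mean brightness, brightness spread, and mean redness."""
    pixels = [px for row in image for px in row]
    brightness = [sum(px) / 3.0 for px in pixels]
    mean_b = sum(brightness) / len(brightness)
    spread = math.sqrt(sum((b - mean_b) ** 2 for b in brightness) / len(brightness))
    redness = sum(px[0] - (px[1] + px[2]) / 2.0 for px in pixels) / len(pixels)
    return [mean_b, spread, redness]


def classify(features):
    """Logistic score: probability that the lesion should be flagged."""
    z = BIAS + sum(w * f for w, f in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))


def analyze(image):
    """Run preprocessing, feature extraction, and classification in order."""
    return classify(extract_features(preprocess(image)))


# Image capture is simulated with a 2x2 grid of RGB pixels.
sample = [[(120, 80, 70), (130, 85, 75)],
          [(60, 40, 35), (125, 82, 72)]]
probability = analyze(sample)
print(f"flag probability: {probability:.2f}")
```

Because the features in this sketch are raw brightness and color statistics, the surrounding skin tone shifts the score directly, which hints at why preprocessing and feature choices matter for fairness.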

For a deeper look at rash diagnosis, see our overview of AI-driven rash detection.

Prevalence in Clinical Settings

- Melanoma screening apps triage suspicious moles before in-person visits.

- Teledermatology services use AI to speed remote consultations.

- Educational simulators train students with AI-annotated cases.

Key studies include the PubMed study and the Stanford HAI analysis.

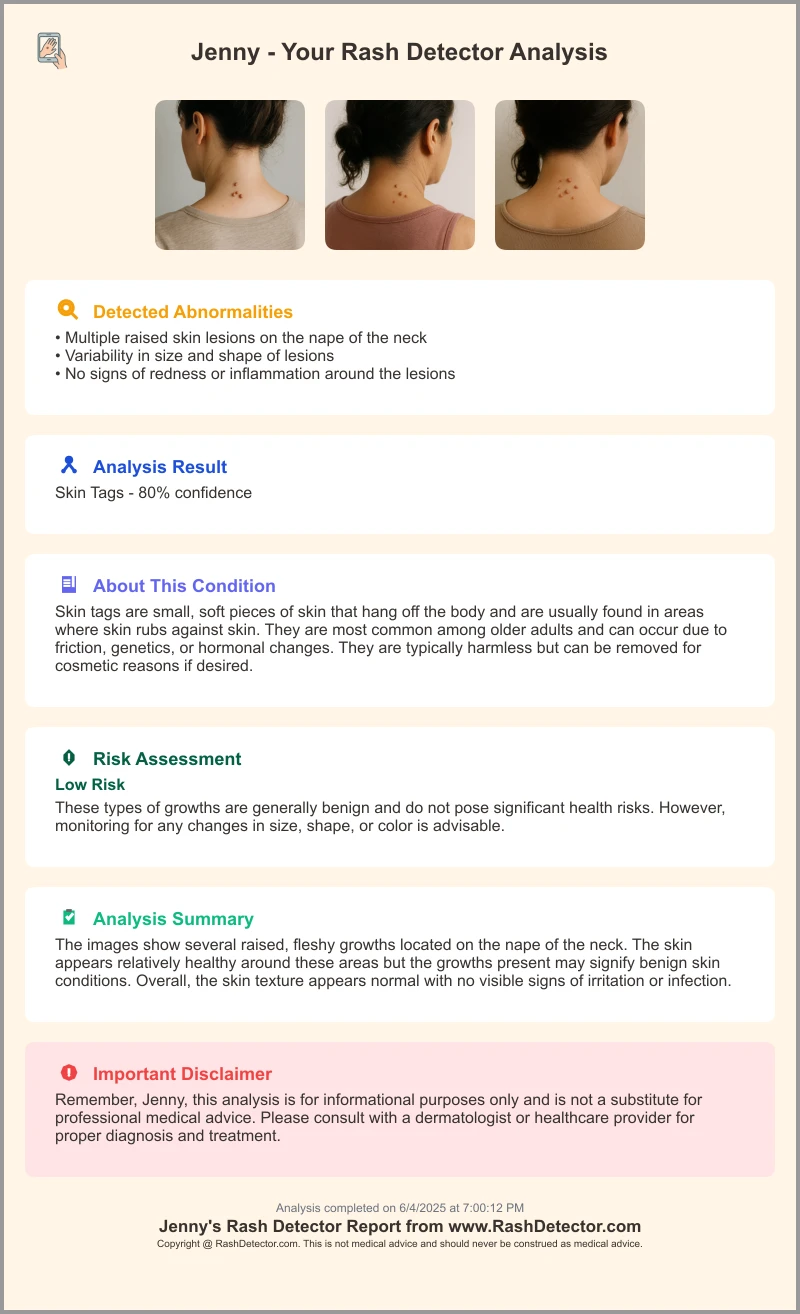

Practical Example of AI-driven Analysis

Here’s a sample report from a leading Skin Rash App:

Sources of Racial Bias in AI Systems

Bias in AI refers to systematic errors that skew predictions and disadvantage certain groups. When underrepresented populations face consistent misclassification, it's a clear example of racial bias in skin AI.

Key Definitions

- Bias in AI: Any error pattern resulting in unfair outcomes.

- Racial bias: Performance drops for specific racial or ethnic groups due to gaps in the training data.

Common Sources of Bias

- Dataset Imbalance

  - Dark skin tones are underrepresented; one review found only 11 of 106,000 images showed dark skin. (Source: RCSI SMJ report)

- Algorithmic Shortcomings

  - Proprietary “black box” models hide decision logic, complicating bias detection. (Source: ProBiologists article)

- Annotation Bias

  - Human labelers may lack exposure to diverse skin types, embedding errors at the ground truth level. (Source: Stanford HAI analysis)
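A first check for dataset imbalance is simply to count how many images fall into each skin-type group. The sketch below assumes each image record carries a Fitzpatrick skin type label (I to VI) under a hypothetical `fitzpatrick` key; the records and the 5% threshold are made up for illustration, not taken from any cited dataset.

```python
from collections import Counter

SKIN_TYPES = ["I", "II", "III", "IV", "V", "VI"]


def skin_type_shares(records):
    """Return the fraction of records per Fitzpatrick type (0.0 if absent)."""
    counts = Counter(r["fitzpatrick"] for r in records)
    total = sum(counts.values())
    return {t: counts.get(t, 0) / total for t in SKIN_TYPES}


def underrepresented(shares, min_share=0.05):
    """List skin types whose share falls below the chosen threshold."""
    return [t for t, share in shares.items() if share < min_share]


# Made-up metadata: 100 records skewed toward lighter skin types.
records = (
    [{"fitzpatrick": "I"}] * 20
    + [{"fitzpatrick": "II"}] * 40
    + [{"fitzpatrick": "III"}] * 30
    + [{"fitzpatrick": "IV"}] * 7
    + [{"fitzpatrick": "V"}] * 3
)

shares = skin_type_shares(records)
flagged = underrepresented(shares)
print(flagged)  # ['V', 'VI']
```

Types with no images at all, like VI here, are reported with a share of zero instead of being dropped, so a complete absence is as visible as a small share.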

Investigative Findings on Skin AI Bias

This section examines studies and real-world examples showing how racial bias in skin AI manifests and persists:

- Data Underrepresentation: Only 10% of AI dermatology studies report skin tone; of 106,000 images, just 11 depicted darker skin. (RCSI SMJ)

- Performance Disparities: Top models lost up to 30% accuracy on dark skin images in the Diverse Dermatology Images (DDI) dataset. (PubMed)

- Google DermAssist: Only 2.7% of the initial data came from the darkest Fitzpatrick skin types; after criticism, the datasets were enriched and performance was published by skin type. (RCSI SMJ)

- Educational Gaps: AI audits found fewer than 5% of dermatology textbook images showed dark skin lesions. (Stanford HAI)

- Clinician Reliance: Less-experienced physicians depended heavily on AI recommendations, yet “fair AI” still didn’t fully close accuracy gaps for dark skin. (Northwestern study)

Implications for Patient Care

When AI tools underperform on darker skin, patient outcomes and trust are at risk:

- Misdiagnosis & Delayed Treatment: False negatives for melanoma are higher in dark-skinned patients, delaying critical care. (RCSI SMJ)

- Health Disparities: Melanoma survival is 66% in Black patients vs. 90% in non-Hispanic White patients, a gap driven in part by delayed diagnoses. (RCSI SMJ)

- Erosion of Trust: Repeated misreads by AI can undermine confidence in digital health services.

Strategies to Mitigate Racial Bias

- Dataset Diversification

- Explainable AI & Transparency

  - Use interpretability tools like saliency maps to trace model decisions.

  - Publish architectures and performance broken down by demographics. (ProBiologists)

- Inclusive Testing Protocols

  - Mandate pre-deployment testing on demographically balanced sets.

  - Report sensitivity and specificity by skin tone in regulatory submissions. (PubMed)

- Educational Equity

  - Audit and revise teaching materials to include diverse skin presentations.

  - Adopt frameworks like STAR-ED for content equity. (Stanford HAI)

- Regulatory & Community Oversight

  - Engage policymakers to enforce equity standards and transparency reports.

  - Require third-party audits before clinical approval. (ProBiologists)
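Reporting sensitivity and specificity by skin tone comes down to building a separate confusion matrix for each group. Here is a minimal sketch, assuming binary labels (1 = malignant, 0 = benign) and a hypothetical split into "light" and "dark" groups; the evaluation rows are invented for illustration.

```python
from collections import defaultdict


def metrics_by_group(rows):
    """rows: iterable of (group, true_label, predicted_label) with 0/1 labels.

    Returns {group: {"sensitivity": ..., "specificity": ...}}; a metric is
    None when the group has no positive (or no negative) cases.
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, truth, pred in rows:
        if truth == 1:
            counts[group]["tp" if pred == 1 else "fn"] += 1
        else:
            counts[group]["tn" if pred == 0 else "fp"] += 1

    report = {}
    for group, c in counts.items():
        pos, neg = c["tp"] + c["fn"], c["tn"] + c["fp"]
        report[group] = {
            "sensitivity": c["tp"] / pos if pos else None,
            "specificity": c["tn"] / neg if neg else None,
        }
    return report


# Invented evaluation results for illustration.
rows = [
    ("light", 1, 1), ("light", 1, 1), ("light", 1, 1), ("light", 1, 0),
    ("light", 0, 0), ("light", 0, 0), ("light", 0, 0), ("light", 0, 1),
    ("dark", 1, 1), ("dark", 1, 0), ("dark", 1, 0), ("dark", 1, 0),
    ("dark", 0, 0), ("dark", 0, 0), ("dark", 0, 0), ("dark", 0, 1),
]

report = metrics_by_group(rows)
gap = report["light"]["sensitivity"] - report["dark"]["sensitivity"]
print(report)
print(f"sensitivity gap: {gap:.2f}")  # sensitivity gap: 0.50
```

In this invented sample both groups share the same specificity, so the disparity only becomes visible once sensitivity is split by group. A single pooled figure would blur it.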

Explore emerging innovations for equitable care: Inclusive Skin Health Technology.

Future Directions and Recommendations

Collaboration and innovation are key to fair AI for all skin tones.

For Developers

- Conduct regular bias audits and performance reviews.

- Explore synthetic data augmentation for underrepresented skin types. (RCSI SMJ, PubMed)
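Synthetic augmentation itself requires a generative model and does not fit in a short example. A simpler, related technique is inverse-frequency weighting, where samples from rarer skin types receive proportionally larger training weights. The sketch below is an illustration under that substitution, not a method drawn from the cited sources, and the group labels are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(groups):
    """Weight each sample by total / (n_groups * group_count).

    Every group then contributes the same total weight, and the weights
    sum to the number of samples.
    """
    counts = Counter(groups)
    total, n_groups = len(groups), len(counts)
    return [total / (n_groups * counts[g]) for g in groups]


# Hypothetical skin-type labels for ten training images.
groups = ["II"] * 8 + ["V"] * 2
weights = inverse_frequency_weights(groups)

print(weights[0])   # 0.625 for each type II image
print(weights[-1])  # 2.5 for each type V image
```

Weighting adds no new information about how conditions present on darker skin, so it complements collecting more diverse images and does not replace it.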

For Healthcare Professionals

- Curate and share diverse clinical image libraries.

- Critically evaluate AI tools for demographic performance before adoption. (Stanford HAI)

For Policymakers & Regulators

- Enforce mandatory demographic performance reporting in AI approvals.

- Create guidelines for equity benchmarks in dermatology diagnostics. (ProBiologists)

Emerging Innovations

- Multi-stakeholder governance frameworks to balance interests of patients, developers, and regulators.

- Open data consortia and standardized equity metrics to track progress.

Conclusion

Addressing racial bias in skin AI is vital to achieving fair, accurate dermatological diagnosis for every patient. Researchers, developers, clinicians, and regulators share the responsibility to build diverse datasets, transparent algorithms, and inclusive educational resources. Through open research and data sharing, policy advocacy, and ongoing bias monitoring, we can move toward equitable skin health for all.

FAQ

What causes racial bias in skin AI?

The primary drivers are dataset imbalances, algorithmic opacity, and annotation inconsistencies that embed unfair patterns into model predictions.

How can AI developers reduce bias?

By diversifying training data across all skin types, employing explainable AI tools, and conducting rigorous, demographically balanced testing before deployment.

Are there regulations for equitable AI in dermatology?

Regulatory bodies are increasingly requiring demographic performance reporting, transparency disclosures, and third-party audits prior to clinical approval.