Inaccurate AI Skin Analysis: Causes, Cases, and Solutions

Explore the causes, real-world cases, and solutions to improve inaccurate AI skin analysis, addressing dataset bias and enhancing patient safety.

Estimated reading time: 8 minutes

Key Takeaways

- AI skin analysis can misdiagnose due to dataset bias and poor image quality.

- Opaque “black-box” models erode trust without explainability.

- Human oversight and clinical context are essential safeguards.

- Future success requires diverse data, explainable AI, and robust regulatory standards.

Table of Contents

- Introduction

- Understanding AI Skin Analysis and Inaccurate AI Skin Analysis

- Challenges Leading to Inaccurate AI Skin Analysis

- Real-World Implications and Case Studies of Inaccurate AI Skin Analysis

- Challenges in Diagnosing Rashes Using Inaccurate AI Skin Analysis

- Balancing AI Assistance with Medical Expertise for Inaccurate AI Skin Analysis

- Future Directions and Improvements in Inaccurate AI Skin Analysis

- Conclusion

- FAQ

Introduction

Inaccurate AI skin analysis is a growing concern as image processing and machine learning, often convolutional neural networks, are used to assess dermatological conditions from photographs. With teledermatology tools and mobile apps being adopted rapidly, reports of misdiagnosis raise patient safety concerns. This post explores the causes of inaccuracy, examines real-world case studies, and discusses solutions for improving skin diagnostics.

“AI analysis must be paired with clinical judgment to ensure patient safety.”

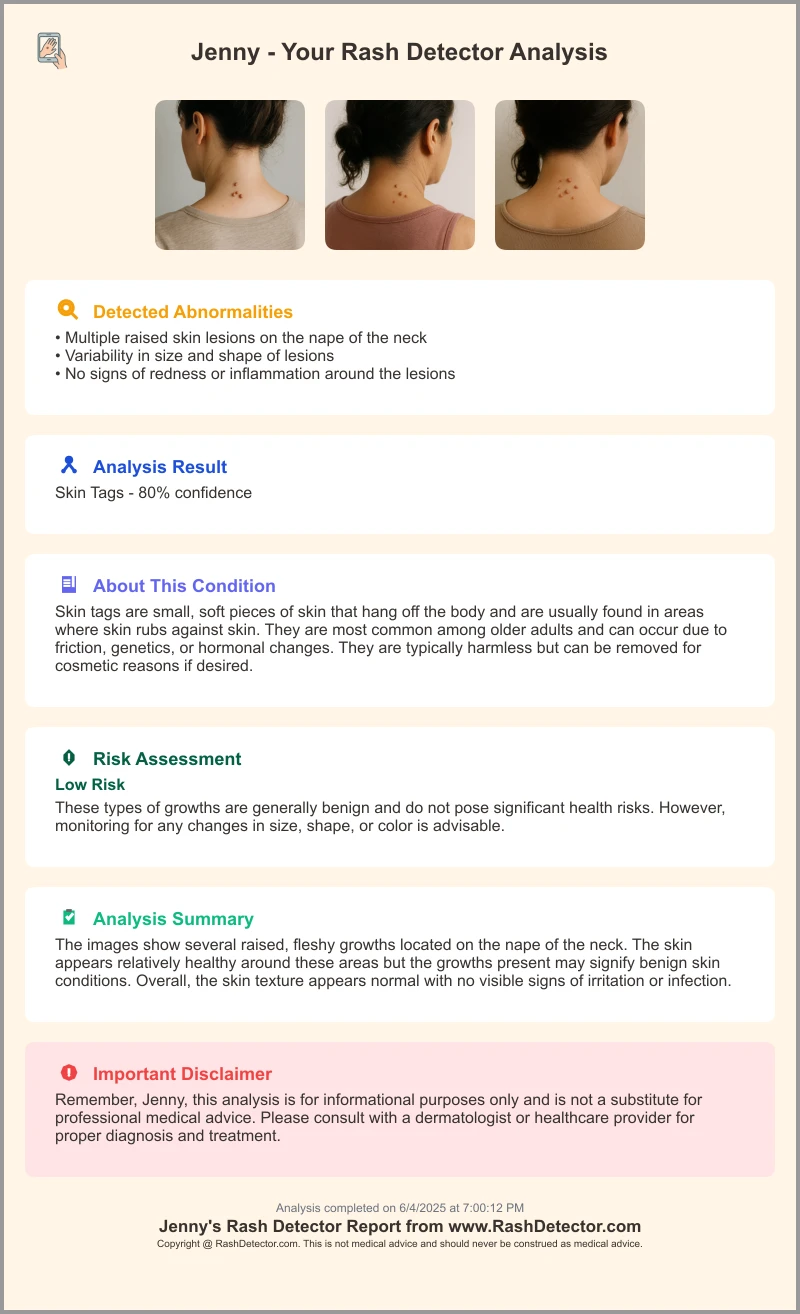

To explore practical AI assessment, Rash Detector lets users upload three images and receive an instant, AI-powered sample report.

Understanding AI Skin Analysis and Inaccurate AI Skin Analysis

Core Technology

- Convolutional Neural Networks (CNNs): Detect visual features in stages. Early layers pick up pixel-level cues such as redness, deeper layers capture lesion shapes, and the final layers output a disease classification.

- Deep Learning Methods: Extract textures and patterns using filters whose weights are adjusted during training to recognize skin conditions.
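To make the idea of a filter concrete, here is a minimal sketch of a single convolution step in plain Python. The 4x4 "redness" image and the hand-written edge kernel are made up for illustration. A trained CNN learns its kernel values from data and stacks many such layers.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses only, as most CNN layers do after a filter."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 redness channel: a bright patch on the right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-written vertical-edge kernel (illustrative, not learned).
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(convolve2d(image, kernel))
for row in feature_map:
    print(row)
```

The filter responds only where intensity changes from left to right, which is how an early layer might mark the border of a reddened area.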

Data Inputs and Training

- Labeled Skin Images: Thousands of annotated photos showing acne, eczema, psoriasis, and skin cancer.

- Patient Metadata: Age, gender, and Fitzpatrick skin type, included to give the model additional context.

- Datasets:

  - Public Repositories: Open-access images with variable labeling quality.

  - Proprietary Clinical Databases: Richer metadata but limited diversity and transparency.

Benefits vs. Inherent Challenges

- Benefits: Rapid triage, scalable screening, and performance metrics rivaling board-certified dermatologists.

- Challenges: Device variability, regulatory transparency, and clinician trust in opaque “black box” models.

Challenges Leading to Inaccurate AI Skin Analysis

Dataset Limitations and Bias

- Under-representation: Most datasets focus on Fitzpatrick I–III skin types, causing error rates to spike in IV–VI.

- Rare Conditions: Limited images of atypical rashes or rare malignancies lead to misclassification.
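One way to spot under-representation before training is to count how many images fall into each Fitzpatrick type. The sketch below assumes each image already carries a Fitzpatrick label. The 10% `min_share` threshold and the sample counts are illustrative assumptions, not a published standard.

```python
from collections import Counter

FITZPATRICK_TYPES = ["I", "II", "III", "IV", "V", "VI"]


def representation_report(labels, min_share=0.10):
    """Count images per Fitzpatrick type and flag types below min_share.

    min_share is an assumed threshold chosen for illustration only.
    """
    counts = Counter(labels)
    total = len(labels)
    report = {}
    for skin_type in FITZPATRICK_TYPES:
        count = counts.get(skin_type, 0)
        share = count / total if total else 0.0
        report[skin_type] = {
            "count": count,
            "share": share,
            "under_represented": share < min_share,
        }
    return report


# Made-up label distribution for a hypothetical 100-image dataset.
sample_labels = (
    ["I"] * 18 + ["II"] * 35 + ["III"] * 30
    + ["IV"] * 12 + ["V"] * 4 + ["VI"] * 1
)

report = representation_report(sample_labels)
flagged = [t for t, row in report.items() if row["under_represented"]]
print("Under-represented types:", flagged)
```

A report like this only describes the data. Closing the gap still requires collecting or generating more images for the flagged types.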

Image Quality Factors

- Lighting Variations: Harsh shadows or uneven light alter color readings.

- Camera Angle & Resolution: Skewed angles distort lesion shape; low megapixels miss fine details.

- Recommendations: Use ≥12 MP cameras and standardized diffuse lighting protocols.
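The recommendations above could be enforced with a simple pre-check before a photo reaches the model. The following is a minimal sketch: the 12 MP floor comes from the text, while the brightness and contrast thresholds, the function name, and the sample pixel values are assumptions chosen for illustration.

```python
def check_photo_quality(width_px, height_px, gray_pixels,
                        min_megapixels=12.0,
                        min_mean=60, max_mean=200, min_spread=30):
    """Return a list of quality issues for one photo.

    gray_pixels is a sample of grayscale intensities in the range 0-255.
    All thresholds except min_megapixels are illustrative assumptions.
    """
    issues = []

    megapixels = width_px * height_px / 1_000_000
    if megapixels < min_megapixels:
        issues.append("resolution below threshold")

    mean = sum(gray_pixels) / len(gray_pixels)
    if mean < min_mean:
        issues.append("underexposed")
    elif mean > max_mean:
        issues.append("overexposed")

    # A narrow intensity range suggests flat, low-contrast lighting.
    spread = max(gray_pixels) - min(gray_pixels)
    if spread < min_spread:
        issues.append("low contrast")

    return issues


good = check_photo_quality(4032, 3024, [90, 120, 150, 110])
bad = check_photo_quality(1280, 720, [20, 25, 30, 22])
print("good photo issues:", good)
print("bad photo issues:", bad)
```

An app could use the returned list to ask the user to retake the photo instead of passing a poor image to the classifier.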

Algorithmic Complexity and Transparency

- Black-Box Models: Conceal decision logic, undermining clinician trust and regulatory approval.

- Explainable AI: Saliency maps and heatmaps reveal regions influencing diagnosis, boosting transparency.
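Occlusion sensitivity is one simple way to produce such a heatmap: hide one patch of the image at a time and record how much the model's score drops. The sketch below uses a toy scoring function as a stand-in for a real classifier. `toy_score`, the patch size, and the image values are all hypothetical.

```python
def occlusion_saliency(image, score_fn, patch=2, baseline=0):
    """Build a heatmap of score drops when each patch is masked out."""
    base_score = score_fn(image)
    h, w = len(image), len(image[0])
    saliency = [[0.0] * w for _ in range(h)]

    for r0 in range(0, h, patch):
        for c0 in range(0, w, patch):
            rows = range(r0, min(r0 + patch, h))
            cols = range(c0, min(c0 + patch, w))

            # Copy the image and blank out the current patch.
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = baseline

            # A large drop means the patch mattered to the score.
            drop = base_score - score_fn(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


def toy_score(image):
    """Stand-in for a model: sums intensity in the top-left 2x2 region."""
    return sum(image[r][c] for r in range(2) for c in range(2))


# Hypothetical 4x4 image with a "lesion" in the top-left corner.
image = [
    [5, 5, 1, 1],
    [5, 5, 1, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]

heatmap = occlusion_saliency(image, toy_score)
for row in heatmap:
    print(row)
```

Only the top-left patch shows a non-zero drop here, matching the region the toy model depends on. A clinician can run the same check on a real model to see whether it is looking at the lesion or at background artifacts.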

Lack of Clinical Context

- Isolated Analysis: AI sees only static images, omitting patient history and physical exam details.

- Impact: Differential diagnoses often require context beyond visuals.

Real-World Implications and Case Studies of Inaccurate AI Skin Analysis

Case Study: DermaSensor on Diverse Skin Tones

- FDA-Approved Device: For primary care melanoma screening.

- Findings: High sensitivity but low specificity in darker-skinned participants.

- Implication: Lack of diverse trial enrollment risks overdiagnosis.
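Sensitivity and specificity are easy to compute per subgroup, which is how a gap like the one described above becomes visible. The sketch below uses entirely made-up records to show the arithmetic. The numbers are not DermaSensor results, and the group names and function names are illustrative.

```python
def sensitivity_specificity(records):
    """Compute (sensitivity, specificity) from (truth, prediction) pairs."""
    tp = fn = tn = fp = 0
    for truth, pred in records:
        if truth and pred:
            tp += 1
        elif truth and not pred:
            fn += 1
        elif not truth and pred:
            fp += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if (tp + fn) else None
    specificity = tn / (tn + fp) if (tn + fp) else None
    return sensitivity, specificity


def metrics_by_group(rows):
    """Group (group, truth, prediction) rows and score each group."""
    groups = {}
    for group, truth, pred in rows:
        groups.setdefault(group, []).append((truth, pred))
    return {g: sensitivity_specificity(r) for g, r in groups.items()}


# Made-up records for illustration only: (skin type group, has disease, flagged).
rows = (
    [("I-III", True, True)] * 4 + [("I-III", True, False)] * 1
    + [("I-III", False, False)] * 4 + [("I-III", False, True)] * 1
    + [("IV-VI", True, True)] * 2
    + [("IV-VI", False, False)] * 1 + [("IV-VI", False, True)] * 3
)

results = metrics_by_group(rows)
for group, (sens, spec) in results.items():
    print(group, "sensitivity:", sens, "specificity:", spec)
```

In this toy data the second group has perfect sensitivity but flags three of four healthy cases, which is the overdiagnosis pattern that low specificity produces.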

Consumer Mobile App Risks

- Inconsistent Accuracy Claims: Vague metrics without peer-reviewed validation.

- Minimal Dermatologist Oversight: Recommendations often unverified.

- Privacy Gaps: Unclear data handling and consent.

Patient Trust and Safety

- Survey Insights: 63% of users unwilling to rely on AI for diagnosis.

- Potential Harms: Delayed treatment, unnecessary anxiety, and resource burden.

Challenges in Diagnosing Rashes Using Inaccurate AI Skin Analysis

- Subtleties and Overlapping Features: Erythema, scaling, and papules appear in many different conditions, so rashes with distinct causes can look alike in a photo.

- External Factors: Lighting temperature, flash reflections, skin moisture, and image angle confound AI.

- Rare Conditions: With scarce training examples, models tend to assign atypical rashes to more common diagnoses.

Balancing AI Assistance with Medical Expertise for Inaccurate AI Skin Analysis

- Supportive Role: AI outputs as decision-support tools, not replacements for clinical evaluation.

- Review Confidence Scores: Low-confidence outputs warrant biopsy or referral.

- Choose Approved Tools: Prefer FDA-cleared or CE-marked apps with published validation studies.

- Informational Use for Patients: Treat AI feedback as preliminary and consult a dermatologist for persistent lesions.
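The confidence-score guidance above can be expressed as a small routing rule. This is a sketch only: the 0.80 `review_threshold` and the action wording are assumptions for illustration, not clinical standards, and either route keeps a clinician in the loop.

```python
def triage(label, confidence, review_threshold=0.80):
    """Route one AI output based on its confidence score.

    review_threshold is an assumed cut-off, not a clinical standard.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    if confidence < review_threshold:
        # Low confidence: escalate rather than act on the AI label.
        action = "refer to dermatologist"
    else:
        # High confidence still needs a clinician to confirm.
        action = "clinician reviews AI suggestion"

    return {"label": label, "confidence": confidence, "action": action}


low = triage("psoriasis", 0.55)
high = triage("eczema", 0.93)
print(low)
print(high)
```

In practice the threshold would need to be set from validation data for the specific tool and population, since a score of 0.80 does not mean the same thing across models.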

Future Directions and Improvements in Inaccurate AI Skin Analysis

- Data Diversity: Multi-center collaborations covering all Fitzpatrick types and rare conditions.

- Synthetic Augmentation: Generative adversarial networks (GANs) can simulate underrepresented skin tones and lesions.

- Explainable AI & Quality Controls: Real-time alerts for low-quality photos and saliency maps for transparency.

- Rigorous Validation: Standardized metrics and real-world clinical trials.

Conclusion

Inaccurate AI skin analysis arises from dataset bias, image quality issues, algorithmic opacity, and lack of clinical context. While AI can accelerate triage and screening, over-reliance can harm patients through misdiagnosis, delays, and unnecessary anxiety. The path forward requires:

- Human Oversight: Always pair AI outputs with expert dermatological assessment.

- Explainable Models: Ensure transparency in decision-making.

- Regulatory Vigilance: Promote standardized validation and diverse dataset requirements.

- Patient Education: Encourage users to treat AI feedback as preliminary.

Support continued research into diverse, explainable AI models, and advocate for clear regulatory frameworks and clinical guidelines. Together, these steps can harness AI’s potential for better skin health while protecting patient trust and safety.

FAQ

- Q: Why do AI skin analysis tools misdiagnose?

A: Common causes include dataset bias, poor image quality, and “black box” algorithms lacking transparency.

- Q: Can darker skin tones affect accuracy?

A: Yes. Under-representation of Fitzpatrick IV–VI in training data often leads to lower specificity and higher error rates.

- Q: How can clinicians mitigate risks?

A: Use AI as a support tool, review confidence scores, and validate results with biopsies or dermatologist referrals.

- Q: What improvements are on the horizon?

A: Synthetic data augmentation, explainable AI techniques, diverse multi-center datasets, and stricter regulatory validation.