Improving AI Accuracy with Community Input: A Guide to Better Rash Diagnosis

Learn how improving AI accuracy with community input enhances rash diagnosis by leveraging real-world data and feedback for better precision.

Estimated reading time: 8 minutes

Key Takeaways

- Community-driven data fills gaps in training sets, reducing bias.

- User feedback pinpoints edge cases and misdiagnoses for model refinement.

- Crowdsourcing methods ensure diverse, representative rash images and metadata.

- Structured retraining and privacy-preserving techniques boost reliability.

- Ongoing validation and oversight maintain ethical standards and data quality.

Table of Contents

- 1. Understanding AI Accuracy in Rash Diagnosis

- 2. Role of Community Input

- 3. Leveraging Crowdsourced Data

- 4. Enhancing AI Models Through Community Feedback

- 5. Challenges and Considerations

- 6. Conclusion

- 7. FAQ

1. Understanding AI Accuracy in Rash Diagnosis

AI accuracy, or diagnostic reliability, measures the percentage of correct predictions against clinical ground truth. Precise AI systems require rigorous benchmarks against expert-verified cases. In rash diagnosis, high predictive accuracy is essential because conditions like eczema, psoriasis, and drug reactions can appear similar. A missed diagnosis (false negative) may delay care, while a false positive can trigger unnecessary treatments.
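To make these terms concrete, here is a minimal sketch of how accuracy, false-negative rate, and false-positive rate could be computed for one condition against ground-truth labels. The function names and the toy labels are illustrative assumptions, not part of any specific tool.

```python
def confusion_counts(y_true, y_pred, positive):
    """Count TP, FP, FN, TN with one condition treated as the positive class."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1  # flagged the condition when it was something else
        elif truth == positive:
            fn += 1  # missed a real case of the condition
        else:
            tn += 1
    return tp, fp, fn, tn


def summarize(y_true, y_pred, positive):
    """Return accuracy and error rates for the chosen positive class."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "false_negative_rate": fn / (tp + fn) if (tp + fn) else 0.0,
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
    }


# Illustrative labels only, not real clinical data.
truth = ["eczema", "psoriasis", "eczema", "drug_reaction", "eczema"]
preds = ["eczema", "eczema", "psoriasis", "drug_reaction", "eczema"]
print(summarize(truth, preds, positive="eczema"))
```

Reporting the two error rates separately matters here because a single accuracy figure hides whether the model tends to miss cases or to over-call them.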

Key challenges in model precision:

- Variability in photo quality: inconsistent lighting, focus, and framing hinder analysis.

- Limited dataset diversity: many training sets under-represent darker skin tones, leading to racial bias.

- Black-box opacity: deep learning models often lack interpretability, making error tracing difficult.

These obstacles underscore the need for community input. By integrating feedback and real-world data, we can fill blind spots and make AI more robust.


2. Role of Community Input

Community input comprises three pillars:

- User feedback reports: patients and clinicians report misdiagnoses or edge-case failures.

- Crowdsourced images and symptom logs: diverse users submit rash photos with metadata (age, skin tone, symptoms).

- Published case studies: peer-reviewed or community-curated reports highlight rare presentations.

How these inputs help:

- Reveal real-world misses: patient and practitioner reports surface unseen scenarios.

- Expand demographic representation: crowdsourced submissions reduce racial and age bias.

- Capture rare conditions: drug reactions, tropical diseases, or genetic syndromes often slip through standard datasets.

Examples include a 5,000-photo repository of drug-induced rashes and clinician-curated misclassification reviews.

3. Leveraging Crowdsourced Data

Collecting high-quality crowdsourced data involves technical and logistical planning:

Data Collection Methods

- Mobile/Web Apps: Guided consent flows capture images, location, age, skin tone, and symptoms.

- Clinic Partnerships: Collaborations with dermatology clinics and advocacy groups ensure validated cases.

Technical Steps

- Standardize image resolution (1024×1024) and file formats (JPEG/PNG).

- Implement consent protocols and anonymization pipelines to comply with HIPAA/GDPR.

- Use annotation platforms where clinicians tag lesions, boundaries, and severity.
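As one possible shape for the anonymization step, the sketch below drops direct identifiers from a submission record and replaces the user ID with a keyed hash. The field names (`user_id`, `email`, `gps`, and so on) and the `contributor` key are hypothetical. Keyed hashing is pseudonymization rather than full anonymization, so a real pipeline would still need a reviewed de-identification policy and would also have to strip metadata embedded in the image files.

```python
import hashlib
import hmac

# Hypothetical field names; a real schema and policy would define these.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "gps"}


def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Map a user ID to a keyed SHA-256 digest so submissions from the same
    person can be linked without storing the original identifier."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def anonymize_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy without direct identifiers, keyed by a pseudonym."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "user_id"
    }
    cleaned["contributor"] = pseudonymize_id(record["user_id"], secret_key)
    return cleaned


sample = {
    "user_id": "u-123",
    "email": "a@example.com",
    "age": 34,
    "skin_tone": "V",
    "symptoms": ["itching"],
}
# The key below is a placeholder; a real key would live in a secrets manager.
print(anonymize_record(sample, secret_key=b"demo-key-not-for-production"))
```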

Tools and Platforms

- Open-source annotation tools and formats (e.g., VIA for labeling, COCO-style annotations for storage).

- Secure cloud storage with encryption and audit logs.

- CI pipelines to auto-ingest new submissions, validate metadata, and flag anomalies.
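The metadata validation that an ingestion pipeline performs could look roughly like the sketch below. The `Submission` fields, the Fitzpatrick skin-type scale, and the age bounds are assumptions chosen for illustration; the format and resolution checks mirror the technical steps listed earlier.

```python
from dataclasses import dataclass, field

ALLOWED_FORMATS = {"jpeg", "jpg", "png"}
EXPECTED_SIZE = (1024, 1024)
# Assumed skin-tone scale; the pipeline may use a different one.
FITZPATRICK_TYPES = {"I", "II", "III", "IV", "V", "VI"}


@dataclass
class Submission:
    image_format: str
    width: int
    height: int
    age: int
    skin_tone: str
    symptoms: list = field(default_factory=list)
    consent_given: bool = False


def validate(sub: Submission) -> list:
    """Return a list of problems; an empty list means the submission passes."""
    problems = []
    if not sub.consent_given:
        problems.append("missing consent")
    if sub.image_format.lower() not in ALLOWED_FORMATS:
        problems.append(f"unsupported format: {sub.image_format}")
    if (sub.width, sub.height) != EXPECTED_SIZE:
        problems.append(f"unexpected resolution: {sub.width}x{sub.height}")
    if not 0 <= sub.age <= 120:
        problems.append(f"implausible age: {sub.age}")
    if sub.skin_tone not in FITZPATRICK_TYPES:
        problems.append(f"unknown skin tone: {sub.skin_tone}")
    if not sub.symptoms:
        problems.append("no symptoms recorded")
    return problems


good = Submission("PNG", 1024, 1024, 41, "IV", ["redness"], consent_given=True)
bad = Submission("gif", 640, 480, 41, "IV", [], consent_given=False)
print(validate(good))  # empty list: nothing to flag
print(validate(bad))   # several problems for a reviewer to inspect
```

Returning every problem at once, rather than failing on the first, lets a flagged submission be triaged in a single pass.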

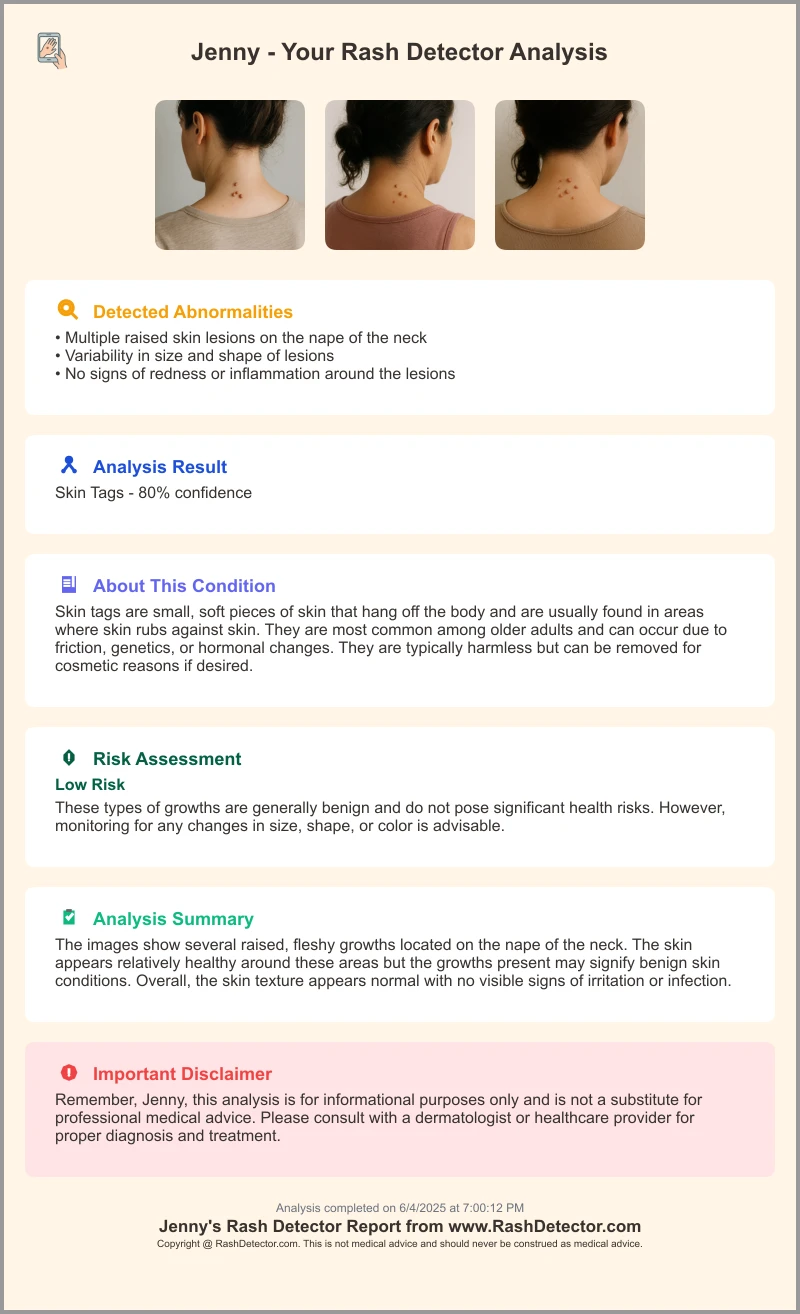

Consumer apps like Rash Detector generate structured reports for retraining cycles.


4. Enhancing AI Models Through Community Feedback

A structured feedback-driven refinement process boosts diagnostic reliability:

- Data Cleaning: validate labels against clinical ground truth, and remove poor-quality images or records with incorrect metadata.

- Supervised Retraining: merge vetted crowdsourced samples with existing data, then rebalance classes so that common conditions do not dominate training.

- Iterative Testing: deploy the updated model in a sandbox environment, collect fresh user feedback and click-stream confidence scores, and track precision, recall, and F1 score across demographics.
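Tracking precision, recall, and F1 across demographics can be sketched as follows. The group labels and the record layout of `(group, true_label, predicted_label)` are assumptions for illustration; a production setup would more likely rely on an established metrics library.

```python
from collections import defaultdict


def prf(y_true, y_pred, positive):
    """Precision, recall, and F1 for one condition treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "support": tp + fn}


def metrics_by_group(records, positive):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    grouped = defaultdict(lambda: ([], []))
    for group, truth, pred in records:
        grouped[group][0].append(truth)
        grouped[group][1].append(pred)
    return {g: prf(t, p, positive) for g, (t, p) in grouped.items()}


# Toy records with hypothetical skin-tone groupings, not real results.
records = [
    ("I-II", "psoriasis", "psoriasis"),
    ("I-II", "psoriasis", "psoriasis"),
    ("I-II", "eczema", "eczema"),
    ("V-VI", "psoriasis", "eczema"),
    ("V-VI", "psoriasis", "psoriasis"),
    ("V-VI", "eczema", "psoriasis"),
]
for group, scores in metrics_by_group(records, positive="psoriasis").items():
    print(group, scores)
```

A gap between groups, as in this toy data, is the kind of signal that would prompt targeted data collection before the next retraining cycle.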

Privacy and Security Best Practices

- Federated Learning: train on-device and aggregate updates without moving raw data.

- Differential Privacy: clip each contribution and inject calibrated noise into gradients so that no single submission can be inferred.

- Transparent Policies: opt-in/opt-out and clear retention terms.
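The first two practices can be illustrated together with a toy sketch: each client's model update is clipped, the server takes a weighted average, and Gaussian noise is added to the result. The vectors, `max_norm`, and `sigma` are arbitrary illustrative values. Choosing a noise level that yields a formal privacy guarantee requires a privacy accounting method that this sketch does not include.

```python
import math
import random


def clip(update, max_norm):
    """Scale an update so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= max_norm or norm == 0.0:
        return list(update)
    scale = max_norm / norm
    return [x * scale for x in update]


def federated_average(updates, sample_counts):
    """Weighted mean of client updates, weighted by local sample count."""
    total = sum(sample_counts)
    dim = len(updates[0])
    return [
        sum(u[i] * n for u, n in zip(updates, sample_counts)) / total
        for i in range(dim)
    ]


def add_gaussian_noise(vector, sigma, rng):
    """Add independent Gaussian noise to each coordinate."""
    return [x + rng.gauss(0.0, sigma) for x in vector]


# Toy model deltas from two devices; raw images never leave the device.
client_updates = [[3.0, 4.0], [0.3, 0.4]]
client_sizes = [10, 30]

clipped = [clip(u, max_norm=1.0) for u in client_updates]
averaged = federated_average(clipped, client_sizes)
noisy = add_gaussian_noise(averaged, sigma=0.05, rng=random.Random(0))
print(averaged, noisy)
```

Clipping before averaging bounds how much any single contributor can move the shared model, which is what makes the added noise meaningful.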

Ethical Considerations

- Informed Consent: explain how data informs model improvements.

- Public Documentation: maintain changelogs showing model enhancements.


5. Challenges and Considerations

Community-driven approaches yield gains but present pitfalls:

- Inconsistent data quality: varying lighting and focus.

- Demographic skew: urban or tech-savvy users may dominate.

- Privacy risks: improper handling can lead to breaches.

Mitigation Strategies

- Expert Validation Panels: dermatologists audit random samples before integration.

- Automated Bias Detection: tools scan for imbalances and trigger augmentation campaigns.

- Security Audits: regular reviews of pipelines, encryption, and consent management.

- Community Advisory Board: diverse stakeholders ensure accountability.
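An automated bias check of the kind listed above could start from something as simple as comparing each group's share of the dataset with a target share. The group names, target shares, and the `tolerance` threshold below are assumptions for illustration; real targets would come from the advisory board or population statistics.

```python
from collections import Counter


def underrepresented_groups(labels, target_share, tolerance=0.5):
    """Flag groups whose observed share is below tolerance * target share.

    labels: one group label per sample (for example, a skin-tone category).
    target_share: dict mapping each group to its desired share of the data.
    """
    counts = Counter(labels)
    total = len(labels)
    flagged = {}
    for group, target in target_share.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < tolerance * target:
            flagged[group] = {"observed": observed, "target": target}
    return flagged


# Toy dataset composition, not real figures.
labels = ["light"] * 70 + ["medium"] * 25 + ["dark"] * 5
targets = {"light": 1 / 3, "medium": 1 / 3, "dark": 1 / 3}
print(underrepresented_groups(labels, targets))
```

Any flagged group could then trigger a targeted collection campaign or an expert review of how that group's images are being sourced.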

Ongoing validation and engagement sustain improvements, fostering trust and equity.

6. Conclusion

Community input closes critical gaps in rash diagnosis by blending model precision with real-world insights. When AI engineers, medical experts, and users collaborate, diagnostic tools become more equitable, reliable, and transparent. Ready to help? Share your experience, submit rash images through trusted platforms, or join the Brookings AI for All Communities project. Together, we can drive continuous improvement and ensure safer outcomes for everyone.

7. FAQ

- What is community input? It includes user reports, crowdsourced images, and case studies that inform AI model improvements.

- How can I contribute my data? Use platforms with guided consent flows—such as Rash Detector—to submit images and metadata securely.

- How is privacy protected? Through anonymization pipelines, federated learning, differential privacy, and transparent consent policies.

- Who reviews the crowdsourced data? Expert panels of dermatologists and trained annotators audit and validate samples before integration.