Fixing App Rash Recognition Errors: A Step-by-Step Guide

Fixing app rash recognition errors ensures accurate AI-driven skin-rash analysis. Improve app reliability with systematic diagnostics and targeted solutions.

Key Takeaways

- Accurate rash recognition is vital for user trust and clinical reliability.

- Common error types include misclassification, detection failures, false positives, and inconsistencies due to image quality.

- Systematic diagnostics—enhanced logging, debugging, user feedback, and benchmarking—help pinpoint root causes.

- Solutions involve enriching datasets, refining algorithms, advanced preprocessing, iterative testing, and code reviews.

- Future-proofing with continuous monitoring, feedback loops, and clinical alignment ensures lasting accuracy.

Table of Contents

- Background & Context

- Identifying the Errors

- Troubleshooting & Diagnostic Steps

- Solutions for Fixing the Errors

- Future-Proofing the Recognition System

- Conclusion

- FAQ

Background & Context: fixing app rash recognition errors

Fixing app rash recognition errors is the process of identifying and correcting failures in AI-driven skin-rash image analysis to ensure accurate results and user trust. It helps apps avoid wrong or missed diagnoses that can harm users or damage credibility. Accurate rash recognition is critical for user satisfaction, clinical reliability, and overall app functionality. According to the American Academy of Dermatology, digital health tools must maintain high accuracy to build and retain trust in patient communities and health professionals. When apps err, users can get false reassurance, undue anxiety, or poor treatment advice—issues documented by Aysa’s team. In this guide, we walk through four key phases: identifying common errors, diagnostic steps, targeted solutions, and future-proofing strategies.

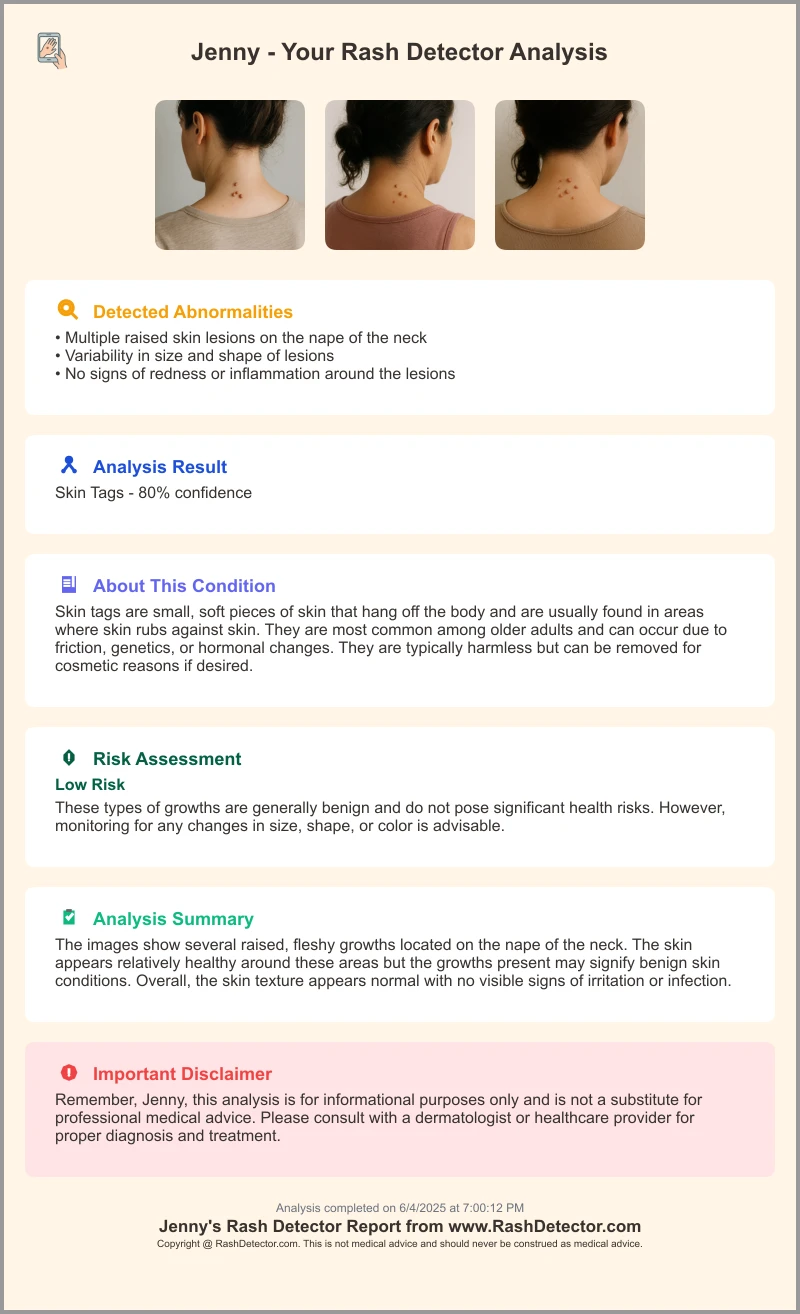

For a practical second opinion, Skin Analysis App leverages AI to analyze rash photos and generate a concise report in seconds. Here’s a sample output showcasing model confidence, risk assessment, and recommended next steps:

Rash recognition in apps uses AI and machine learning to analyze photos of skin. Users upload pictures and answer simple questions. The app then compares the image to a medical database and suggests likely conditions. Fixing app rash recognition errors starts with understanding how these systems work:

- Image upload and pre-screening

- Feature extraction via deep learning

- Database matching and triage questions

- Final classification or guidance

Popular tools include Aysa and VisualDx. While these platforms speed up pre-diagnosis, errors degrade performance and trust. A study by the American Academy of Dermatology found misdiagnosis rates of up to 69%, especially for rare rashes or darker skin tones. Such gaps highlight the need to fix app rash recognition errors at both the data and algorithm levels.

Identifying the Errors: fixing app rash recognition errors

To fix app rash recognition errors, first chart the main error types and their causes:

1. Common Error Types

- Misclassification – Wrong label (e.g., eczema vs. psoriasis).

- Detection failure (false negatives) – Missing a rash entirely.

- False positives – Flagging healthy skin as a rash.

- Partial detection – Only a rash segment is recognized.

- Inconsistent results – Varying diagnoses due to lighting, angle, or skin tone.

2. Technical and Data Contributors

- Poor image quality – Blurry, shadowy, or low-resolution shots.

- Unrepresentative training data – Lack of diversity in skin tone, age, or rash type (AAD study).

- Suboptimal algorithm calibration – Models overfit common rashes, ignoring rare presentations (WhichDerm app issues).

- User input errors – Incomplete symptom answers or wrong region tagging.

Real-world example: In one test, a user with a dark brown skin tone had a mild eczema patch misread as no rash. The app missed key color and texture cues because its training set lacked enough images from similar skin types.

By mapping each error type to its root cause, teams can target fixes precisely—an essential step in fixing app rash recognition errors.

Troubleshooting & Diagnostic Steps: fixing app rash recognition errors

Step 1: Enhance Logging

- Record model confidence scores for each prediction.

- Log input metadata (device type, lighting conditions, image resolution).

- Save edge-case images that trigger low confidence.
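The logging steps above can be sketched as one structured record per prediction. This is a minimal illustration, not a definitive implementation: the field names, the `rash_recognition` logger name, and the `LOW_CONFIDENCE_THRESHOLD` value are all assumptions chosen for the example.

```python
import json
import logging

logger = logging.getLogger("rash_recognition")  # hypothetical logger name

LOW_CONFIDENCE_THRESHOLD = 0.6  # assumed cutoff; tune it on your own data


def build_prediction_record(label, confidence, device, width, height, brightness):
    """Bundle one prediction with the input metadata worth logging."""
    return {
        "label": label,
        "confidence": round(confidence, 4),
        "device": device,
        "resolution": f"{width}x{height}",
        "mean_brightness": brightness,
        # Flag edge cases so the image can be saved for later review.
        "low_confidence": confidence < LOW_CONFIDENCE_THRESHOLD,
    }


def log_prediction(record):
    """Emit the record as one JSON line; use WARNING when confidence is low."""
    level = logging.WARNING if record["low_confidence"] else logging.INFO
    logger.log(level, json.dumps(record, sort_keys=True))


record = build_prediction_record("eczema", 0.42, "example-phone", 1080, 1920, 61.5)
log_prediction(record)
```

Writing each record as a single JSON line keeps the logs easy to filter later, for example by device type or by the `low_confidence` flag.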

Step 2: Enable Detailed Debugging

- Trace pipeline stages: pre-processing, feature extraction, classification.

- Insert debug flags to capture intermediate tensors or feature maps.

- Check whether failures occur during sharpening, color normalization, or cropping.
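One way to trace pipeline stages is to run them through a small wrapper that records each intermediate output and names the stage that failed. The sketch below uses toy stages on a list of pixel intensities; `run_pipeline` and the stage functions are hypothetical stand-ins, not the API of any real app.

```python
def run_pipeline(image, stages, debug=False):
    """Run stages in order; with debug=True, keep each intermediate output."""
    trace = []
    current = image
    for name, stage in stages:
        try:
            current = stage(current)
        except Exception as exc:
            # Name the failing stage instead of surfacing a bare stack trace.
            raise RuntimeError(f"stage '{name}' failed: {exc}") from exc
        if debug:
            trace.append((name, current))
    return current, trace


# Toy stand-ins for real pre-processing and classification steps.
def crop(pixels):
    return pixels[1:-1]


def normalize(pixels):
    peak = max(pixels)
    return [p / peak for p in pixels]


def classify(pixels):
    return "rash" if sum(pixels) / len(pixels) > 0.5 else "no rash"


STAGES = [("crop", crop), ("normalize", normalize), ("classify", classify)]

result, trace = run_pipeline([0, 50, 200, 100, 0], STAGES, debug=True)
for stage_name, output in trace:
    print(stage_name, output)
```

In this toy setup an all-black image fails inside `normalize` with a division by zero, and the wrapper reports that stage by name, which is the kind of signal the debug flags are meant to surface.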

Step 3: Analyze Error Messages & User Feedback

- Aggregate user feedback by skin tone, age group, and lighting conditions (AAD feedback process).

- Cross-reference bug reports with VisualDx error logs.

- Look for repeated failure patterns (e.g., errors common in photos taken under yellow bulb lighting).
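Grouping feedback by a single attribute makes repeated failure patterns visible. The following is a rough sketch that assumes each feedback report is a dictionary with a `correct` flag; the keys and sample values are invented for illustration.

```python
from collections import defaultdict


def error_rate_by_group(reports, key):
    """Return the share of incorrect results for each value of `key`."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for report in reports:
        group = report[key]
        totals[group] += 1
        if not report["correct"]:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical feedback reports; real ones would come from in-app feedback.
reports = [
    {"skin_tone": "dark", "lighting": "yellow bulb", "correct": False},
    {"skin_tone": "dark", "lighting": "daylight", "correct": True},
    {"skin_tone": "light", "lighting": "yellow bulb", "correct": False},
    {"skin_tone": "light", "lighting": "daylight", "correct": True},
    {"skin_tone": "light", "lighting": "daylight", "correct": True},
]

by_lighting = error_rate_by_group(reports, "lighting")
by_skin_tone = error_rate_by_group(reports, "skin_tone")
print(by_lighting)
print(by_skin_tone)
```

In this made-up sample every error comes from yellow bulb lighting, so lighting rather than skin tone would be the first thing to investigate.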

Step 4: Benchmark Against Dermatologist-Validated Datasets

- Use blind test sets that include images across all demographics.

- Compare app predictions to expert labels to measure misclassification rates.

- Track performance metrics: accuracy, precision, recall, F1 score.
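The four metrics can be computed directly from expert labels and app predictions. This sketch treats the task as binary ("rash" versus anything else), which is a simplifying assumption; a multi-class app would compute the same figures per class. The function name and sample labels are illustrative.

```python
def binary_metrics(expert_labels, app_predictions, positive="rash"):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(expert_labels, app_predictions))
    tp = sum(1 for truth, pred in pairs if truth == positive and pred == positive)
    fp = sum(1 for truth, pred in pairs if truth != positive and pred == positive)
    fn = sum(1 for truth, pred in pairs if truth == positive and pred != positive)
    tn = sum(1 for truth, pred in pairs if truth != positive and pred != positive)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical blind test set: dermatologist labels versus app output.
expert = ["rash", "rash", "rash", "none", "none", "rash"]
app = ["rash", "none", "rash", "none", "rash", "rash"]

metrics = binary_metrics(expert, app)
print(metrics)
```

Recall matters most for missed rashes (false negatives), while precision tracks how often healthy skin is flagged, so it helps to report both rather than accuracy alone.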

Real-world debugging tip: In one case, adding verbose logging of JPEG compression levels revealed that heavy compression on older devices caused many false negatives. After detecting this, the team introduced an auto-recompression step to standardize input images.

Solutions for Fixing the Errors: fixing app rash recognition errors

- Enrich Training Datasets: Collect images from diverse users: various skin tones, age groups, and rare rash types (AAD dataset guidelines). Partner with dermatology clinics and incorporate user-reported corrections as supplemental labels.

- Refine and Update Algorithms: Use deep convolutional neural networks with transfer learning. Employ data augmentation: rotation, scaling, color jitter. Periodically retrain models with new data batches.

- Apply Advanced Image Pre-processing: Automatic lighting normalization, color correction using known patches, and sharpening filters to enhance edge contrast without amplifying noise.

- Implement Iterative Testing & Deployment: Roll out updates to a small user cohort (canary deployment). Monitor core error metrics in real time and use A/B testing to compare new vs. old models on key metrics.

- Conduct Rigorous Code Reviews & Peer Validation: Review data-processing pipelines for logic errors. Validate model-serving code to ensure correct preprocessing. Perform pair programming or external audits.
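For the canary deployment step, one common approach is to assign users to the new model deterministically by hashing their ID, so the same user always sees the same model during a rollout. This is a sketch under that assumption; the model names and the 5% default are placeholders, not values from any real deployment.

```python
import hashlib


def in_canary(user_id, rollout_percent):
    """Map a user ID to a stable bucket 0-99 and compare with the rollout size."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < rollout_percent


def pick_model(user_id, rollout_percent=5):
    """Return a placeholder model name for this user."""
    return "model_new" if in_canary(user_id, rollout_percent) else "model_old"


for uid in ["user-1", "user-2", "user-3"]:
    print(uid, pick_model(uid))
```

Because the bucket depends only on the user ID, raising `rollout_percent` keeps existing canary users in the cohort and only adds new ones, which keeps A/B comparisons stable over time.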

Case study: After enriching its dataset with 10,000 new images from dark-skinned volunteers, one app saw its eczema vs. psoriasis accuracy jump from 65% to 84%. Combining that with enhanced pre-processing removed nearly all false negatives in low-light photos.
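The source does not say which pre-processing the app in the case study used. As one illustration of lighting normalization, the sketch below applies gray-world white balance, a standard technique swapped in here as an assumption. It works on a plain list of RGB tuples to stay dependency-free; a real pipeline would use an image library.

```python
def gray_world_balance(pixels):
    """Scale each RGB channel so that all three channels share the same mean."""
    if not pixels:
        return []
    count = len(pixels)
    means = [sum(p[c] for p in pixels) / count for c in range(3)]
    target = sum(means) / 3
    # A channel with zero mean is left unchanged to avoid dividing by zero.
    gains = [target / m if m else 1.0 for m in means]
    return [
        tuple(min(255, round(p[c] * gains[c])) for c in range(3))
        for p in pixels
    ]


# Two pixels with a yellow cast (strong red and green, weak blue).
yellowish = [(200, 180, 100), (100, 90, 50)]
balanced = gray_world_balance(yellowish)
print(balanced)
```

Gray-world assumes the scene averages out to neutral gray, which may not hold for close-up skin photos, so any such step should be benchmarked against labeled data before deployment.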

Future-Proofing the Recognition System: fixing app rash recognition errors

- Continuous Monitoring: Build automated dashboards tracking error categories, model confidence scores, and demographic gaps. Set alert thresholds for spikes in false negatives or positives.

- User Feedback Loop: Integrate in-app reporting for suspected misdiagnoses. Ask users to confirm or correct results; feed these labels back into training.

- Alignment with Clinical Research: Subscribe to leading dermatology journals and guideline updates. Adjust model classes or add new ones when new rash variants are published.

- Scalability Planning: Use cloud infrastructure that auto-scales with growing image volume and model retraining needs. Implement model versioning and feature flags to manage iterations.
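An alert threshold for false negatives can be sketched as a rolling window over recent confirmed-rash cases. The class name, window size, and 20% threshold below are assumptions for illustration; a production dashboard would likely rely on its monitoring platform's own alerting rules.

```python
from collections import deque


class FalseNegativeMonitor:
    """Track the miss rate over the most recent confirmed-rash cases."""

    def __init__(self, window_size=100, alert_rate=0.2):
        self.outcomes = deque(maxlen=window_size)
        self.alert_rate = alert_rate

    def record(self, missed):
        """Record one confirmed-rash case; missed=True means the app found no rash."""
        self.outcomes.append(bool(missed))

    def rate(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self):
        # Wait for a full window so a handful of early cases cannot trigger alerts.
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rate() > self.alert_rate


monitor = FalseNegativeMonitor(window_size=5, alert_rate=0.2)
for missed in [False, False, True, False, False, True]:
    monitor.record(missed)
print(monitor.rate(), monitor.should_alert())
```

The same pattern can be repeated per demographic group to catch gaps that an overall rate would hide.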

Conclusion

Fixing app rash recognition errors is vital to ensure accurate analysis, user trust, and clinical reliability. By identifying error types, following systematic diagnostic steps, applying robust solutions, and future-proofing systems with continuous monitoring and feedback, developers can transform rash recognition apps into dependable tools. Ongoing iteration—through enriched data, advanced algorithms, rigorous testing, and real-world feedback—is the path to lasting accuracy and better health outcomes. Begin applying these steps today to make your skin-rash app more precise and trustworthy. Fixing app rash recognition errors requires dedication, but the payoff in safety and user confidence is priceless.

FAQ

- Q: How do I estimate the impact of image quality on rash detection?

A: Compare model confidence scores and failure rates on a set of test images with varying resolutions, lighting, and focus. Use logging to correlate quality metrics with errors.

- Q: What’s the best way to collect diverse skin-tone datasets?

A: Partner with clinics, use open-access dermatology image repositories, and integrate optional in-app feedback where users can consent to share anonymized photos for research.

- Q: How often should models be retrained?

A: Depending on user volume and error trends, schedule retraining every 3–6 months or when you accumulate at least 5–10% new labeled data to avoid staleness.

- Q: Can pre-processing introduce new errors?

A: Yes, aggressive filters might amplify noise or distort color. Always benchmark preprocessing pipelines with ground truth datasets and conduct A/B tests before deploying updates.

- Q: How do I measure success after deploying fixes?

A: Track key metrics (accuracy, precision, recall, F1 score) on both in-house validation sets and live user data. Monitor demographic breakdowns to ensure equitable performance.
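The retraining guidance in the FAQ can be expressed as a simple trigger. This sketch assumes the 5–10% figure is measured relative to the size of the existing labeled dataset, and it uses the 6-month and 5% bounds as defaults; both readings are assumptions, and the function name is hypothetical.

```python
def should_retrain(months_since_last, new_labeled, total_labeled,
                   max_months=6, min_new_fraction=0.05):
    """Retrain when the model is old enough or enough new labels have arrived."""
    if months_since_last >= max_months:
        return True
    if total_labeled == 0:
        return False
    return new_labeled / total_labeled >= min_new_fraction


print(should_retrain(2, 300, 10000))   # 3% new data after 2 months
print(should_retrain(2, 600, 10000))   # 6% new data after 2 months
print(should_retrain(6, 0, 10000))     # no new data, but 6 months old
```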