Understanding False Positive Rash Detection: Challenges, Impacts, and Solutions

Explore the challenges of false positive rash detection, its impact on patient trust and healthcare, and solutions that balance sensitivity and specificity.

Estimated reading time: 7 minutes

Key Takeaways

- Define false positive rash detection and its implications for specificity and sensitivity.

- Identify common causes: image quality, clinical look-alikes, algorithmic thresholds, dataset bias, and automation bias.

- Discuss limitations: data diversity, domain shift, overfitting, and skin pigmentation variability.

- Propose solutions: balanced datasets, data augmentation, calibrated models, hybrid human-AI workflows, and ongoing validation.

- Explore future directions: foundation models, multimodal integration, continual learning, and equitable regulation.

Table of Contents

- Background on AI in Medical Diagnostics and False Positive Rash Detection

- Understanding False Positives in Rash Detection

- Challenges and Limitations of AI in False Positive Rash Detection

- Mitigating Misdiagnoses in False Positive Rash Detection

- Future Directions for Reducing False Positive Rash Detection

- Conclusion

- FAQ

False positive rash detection occurs when an AI system classifies a benign skin image or non-rash condition as a pathologic rash. Each such error lowers specificity at the chosen sensitivity threshold, and the consequences include unnecessary biopsies, referrals, patient anxiety, and added costs. High false positive rates can drive overtreatment of minor lesions, inflate specialty workloads, and erode trust in AI tools. Balancing sensitivity and specificity is critical: sensitivity that is too low misses dangerous conditions like melanoma, while too many false positives harm patients whose conditions are benign. This post explores the definition, the key challenges (image variability, algorithm limits, data bias, and real-world generalization), and actionable solutions to reduce false positive rash detection in clinical practice.

1. Background on AI in Medical Diagnostics and False Positive Rash Detection

AI approaches in dermatology rely on deep learning methods, primarily convolutional neural networks (CNNs), deep ensembles, and transfer learning. These models are trained on labeled skin image datasets to classify lesions or predict disease severity. Key performance metrics include:

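- Area Under the ROC Curve (AUC): Measures overall model discrimination, i.e., how well the model ranks rash images above non-rash images across all decision thresholds.

- Sensitivity: Ability to detect true rashes, computed as TP / (TP + FN).

- Specificity: Ability to rule out non-rashes, computed as TN / (TN + FP). Every false positive lowers it.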

- Confusion Matrix: Breaks down true/false positive and negative counts per class.
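To make these metrics concrete, here is a minimal Python sketch that computes the confusion matrix, sensitivity, specificity, and AUC for a binary rash/no-rash task. The labels, scores, and the 0.5 decision threshold are toy assumptions for illustration, not data from any study; AUC is computed with the pairwise (Mann-Whitney) formulation rather than by integrating an ROC curve.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count TP, FP, TN, FN for a binary task (1 = rash present, 0 = no rash)."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, pred in zip(y_true, y_pred):
        if pred == 1 and truth == 1:
            counts["tp"] += 1
        elif pred == 1:          # flagged, but no rash: a false positive
            counts["fp"] += 1
        elif truth == 0:
            counts["tn"] += 1
        else:                    # rash present but not flagged: a miss
            counts["fn"] += 1
    return counts


def sensitivity(c: Dict[str, int]) -> float:
    return c["tp"] / (c["tp"] + c["fn"])


def specificity(c: Dict[str, int]) -> float:
    return c["tn"] / (c["tn"] + c["fp"])


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random rash image outscores a random non-rash image
    (ties count as half), i.e. the Mann-Whitney formulation of AUC."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data for illustration only (not from any study).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.6, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

counts = confusion_counts(y_true, y_pred)
print(counts)                          # {'tp': 2, 'fp': 2, 'tn': 3, 'fn': 1}
print(round(sensitivity(counts), 3))   # 0.667
print(round(specificity(counts), 3))   # 0.6
print(round(auc(y_true, scores), 3))   # 0.867
```

Note that AUC does not depend on the threshold, while sensitivity and specificity do: the two false positives here (scores 0.7 and 0.6) exist only because of where the cut-off was placed.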

Common clinical applications:

- Malignancy Triage: Models separate benign from malignant lesions. A UK multicenter dermoscopy study reported AUC up to 95.8%. At 100% sensitivity, however, specificity fell to 64.8%, meaning roughly 35% of benign lesions would still be flagged: a high false positive burden when zero misses are prioritized.

- Inflammatory Disease Grading: Automated scoring of psoriasis severity.

- Acne Classification: Identifying lesion types and counting blemishes.

- Teledermatology Support: AI flags suspicious lesions for remote clinicians.
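The malignancy triage example shows why the operating point matters. The sketch below, a minimal illustration on hypothetical toy scores rather than the UK study's data, scans thresholds from high to low and returns the highest one that reaches a target sensitivity, along with the specificity that choice costs. The function name `threshold_for_sensitivity` is ours, not from any library.

```python
from typing import Sequence, Tuple


def threshold_for_sensitivity(
    y_true: Sequence[int], scores: Sequence[float], target: float = 1.0
) -> Tuple[float, float, float]:
    """Return (threshold, sensitivity, specificity) for the highest threshold whose
    sensitivity meets `target`. A score >= threshold is called 'rash'.

    Lowering the threshold can only raise sensitivity and lower specificity, so the
    first threshold (scanning downward) that meets the target has the best
    specificity among all thresholds that meet it.
    """
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= thr)
        if tp / n_pos >= target:
            tn = sum(1 for t, s in zip(y_true, scores) if t == 0 and s < thr)
            return thr, tp / n_pos, tn / n_neg
    raise ValueError("target sensitivity is not reachable")


# Toy data for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.6, 0.1]

print(threshold_for_sensitivity(y_true, scores, target=0.6))  # (0.8, 0.6666666666666666, 1.0)
print(threshold_for_sensitivity(y_true, scores, target=1.0))  # (0.4, 1.0, 0.6)
```

On this toy data, demanding zero misses drops specificity from 1.0 to 0.6, the same qualitative pattern as the study's drop to 64.8%.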

Real-world observation: In radiology, user studies found that incorrect AI prompts can increase clinician false positive calls—a phenomenon known as automation bias. Similar behavior is seen in dermatology when clinicians overcall lesions flagged by AI.

2. Understanding False Positives in Rash Detection

A false positive in rash detection is an AI prediction of "rash present" or assignment of a specific pathologic rash when histopathology or expert consensus confirms no disease. This inflates false positive counts and lowers overall specificity. Main causes include:

- Image Quality Issues – Poor lighting, blur, compression artifacts, and device variability can distort lesion features. Low-resolution or smartphone images amplify misclassification risks.

- Clinical Look-Alikes – Benign or self-limiting conditions (e.g., insect bites, mild eczema) and post-inflammatory changes can mimic more serious rashes in color and texture. Models trained on narrow visual cues fail to distinguish these mimics.

- Algorithmic Trade-Offs – Operating points tuned for high sensitivity (i.e., lower decision thresholds) reduce missed rashes but inflate false positives. Overfitting to training data also weakens generalization to real-world images.

- Dataset Bias & Overfitting – Models tuned on curated datasets may underperform on diverse skin tones, ages, and community settings. Under-representation of darker skin types can increase misclassification rates.

- Human-AI Interaction – Automation bias causes clinicians to over-trust AI flags, leading to more false positive referrals.
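For the image quality cause, one commonly used screening heuristic is the variance of the Laplacian: sharp images produce strong edge responses, while blurred or flat images do not. This is a swapped-in illustration, not a method the post's sources prescribe. The sketch assumes a grayscale image given as a list of rows, and the cut-off `BLUR_THRESHOLD` is a hypothetical value that would need tuning for each capture device.

```python
from typing import List

Image = List[List[float]]  # 2-D grayscale image as rows of pixel intensities

# Hypothetical cut-off; a real value would have to be tuned per device and dataset.
BLUR_THRESHOLD = 100.0


def laplacian_variance(gray: Image) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels (image must be >= 3x3).
    Sharp edges give large responses; blurred or flat images give values near 0."""
    h, w = len(gray), len(gray[0])
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def passes_quality_gate(gray: Image, threshold: float = BLUR_THRESHOLD) -> bool:
    """Return False for images that should be re-captured rather than classified."""
    return laplacian_variance(gray) >= threshold


sharp = [[255.0 if (x + y) % 2 else 0.0 for x in range(6)] for y in range(6)]
flat = [[128.0] * 6 for _ in range(6)]
print(passes_quality_gate(sharp))  # True
print(passes_quality_gate(flat))   # False
```

A gate like this does not fix misclassification by itself; it routes poor captures back for a retake instead of letting the classifier guess on them.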

Patient Impact:

- Emotional distress and anxiety.

- Unnecessary invasive procedures (biopsies, excisions).

- Added healthcare costs.

- Increased specialty workload and appointment delays.

3. Challenges and Limitations of AI in False Positive Rash Detection

Technological Limitations:

- Data Bias & Insufficiency – Training sets often underrepresent certain skin types, ages, and rare diseases, causing uneven performance.

- Lack of Diversity – Models trained on lighter skin may misdiagnose darker skin presentations, widening health disparities.

- Overfitting – Models that overfit to high-quality, curated training images perform worse on real-world smartphone or clinic photos.

- Sensitivity-Specificity Trade-Offs – Operating points chosen to avoid misses can significantly raise false positives.

- Domain Shift – Differences in lighting, capture devices, and clinical workflows shift feature distributions, increasing error rates.

- Skin Pigmentation Variability – Erythema and lesion borders look different across pigmentation levels.

- Comorbidities & Treatments – Immunosuppression and topical therapies can alter rash appearance.

- Environmental Influences – Seasonal or geographical factors can change rash color and scale.

These challenges make it hard for AI to maintain low false positive rash detection rates across varied settings.
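Uneven performance across skin types is easiest to see when false positive rates are broken out by subgroup instead of pooled. The sketch below assumes each image carries a subgroup label (here, hypothetical coarse Fitzpatrick bands) and uses toy predictions; `MAX_FPR_GAP` is an assumed tolerance, not a published standard.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence

# Hypothetical tolerance for the largest acceptable FPR gap between subgroups.
MAX_FPR_GAP = 0.10


def fpr_by_group(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """False positive rate FP / (FP + TN) per subgroup (1 = rash, 0 = no rash)."""
    false_pos: Dict[Hashable, int] = defaultdict(int)
    negatives: Dict[Hashable, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:  # only images without a rash enter the FPR denominator
            negatives[group] += 1
            if pred == 1:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items()}


# Toy data for illustration only.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 1, 0, 1, 1, 1]
groups = ["I-III"] * 4 + ["IV-VI"] * 4 + ["I-III", "IV-VI"]

rates = fpr_by_group(y_true, y_pred, groups)
gap = max(rates.values()) - min(rates.values())
print(rates)               # {'I-III': 0.25, 'IV-VI': 0.75}
print(gap > MAX_FPR_GAP)   # True: flag the model for subgroup review
```

A pooled FPR here would be 0.5 and would hide the fact that one group carries three times the false positive rate of the other.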

4. Mitigating Misdiagnoses in False Positive Rash Detection

Data and Model Improvements

- Diverse, balanced datasets: Collect multi-ethnic, multi-age, multi-condition cohorts with expert annotations to address underrepresentation of skin of color and rare dermatoses.

- Data augmentation & domain adaptation: Simulate real-world variability (lighting, focus, background) to improve robustness.

- Calibrated probabilities & cost-sensitive learning: Output well-calibrated confidence scores and adjust decision thresholds or penalize false positives more heavily.

- Ensembles & uncertainty quantification: Combine multiple models to average predictions and defer low-confidence cases to human experts.
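The cost-sensitive and ensemble ideas above can be combined in a few lines. This minimal sketch assumes the ensemble members already output calibrated rash probabilities. It averages them, treats their spread as a rough uncertainty signal, and applies the Bayes decision rule for asymmetric costs: flagging costs (1 - p) * C_FP in expectation and not flagging costs p * C_FN, so flag only when p > C_FP / (C_FP + C_FN). The cost values, `max_spread`, and `margin` are hypothetical.

```python
from statistics import mean, pstdev
from typing import Sequence, Tuple


def cost_threshold(cost_fp: float, cost_fn: float) -> float:
    """Cut-off for a calibrated rash probability p: flag only when
    p * cost_fn > (1 - p) * cost_fp, i.e. p > cost_fp / (cost_fp + cost_fn)."""
    return cost_fp / (cost_fp + cost_fn)


def triage(
    member_probs: Sequence[float],
    cost_fp: float = 1.0,
    cost_fn: float = 3.0,
    max_spread: float = 0.15,  # hypothetical limit on ensemble disagreement
    margin: float = 0.10,      # hypothetical "too close to call" band
) -> Tuple[str, float]:
    p = mean(member_probs)          # ensemble-averaged probability
    spread = pstdev(member_probs)   # disagreement between ensemble members
    thr = cost_threshold(cost_fp, cost_fn)
    if spread > max_spread or abs(p - thr) < margin:
        return "defer to dermatologist", p
    return ("rash" if p > thr else "no rash"), p


print(triage([0.90, 0.85, 0.92])[0])                            # rash
print(triage([0.05, 0.10, 0.08])[0])                            # no rash
print(triage([0.10, 0.90, 0.50])[0])                            # defer to dermatologist
print(triage([0.60, 0.62, 0.58])[0])                            # rash
print(triage([0.60, 0.62, 0.58], cost_fp=3.0, cost_fn=1.0)[0])  # no rash
```

Raising `cost_fp` moves the threshold up, which is one concrete way to penalize false positives more heavily: the last call flips a borderline 0.6 from "rash" to "no rash".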

Human-in-the-Loop Solutions

- Hybrid workflows: Dermatologists review AI-flagged cases, countering automation bias, and capture clinician overrides to refine model calibration.

- Teledermatology integration: Embed AI suggestions into teledermatology platforms and track confusion matrix metrics for continuous quality improvement.
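Capturing clinician overrides yields confirmed outcomes that can be used to check whether the confidence scores are trustworthy. One standard summary is expected calibration error (ECE); the sketch below implements a binary version that compares the average predicted rash probability with the observed rash rate in each of ten equal-width bins. The toy review log is invented for illustration.

```python
from typing import Sequence


def expected_calibration_error(
    probs: Sequence[float], labels: Sequence[int], n_bins: int = 10
) -> float:
    """Binary ECE over equal-width bins: the size-weighted mean gap between the
    average predicted rash probability and the observed rash rate in each bin.
    `labels` are clinician- or histopathology-confirmed outcomes (1 = rash)."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))
    total = len(probs)
    ece = 0.0
    for b in bins:
        if b:
            avg_prob = sum(p for p, _ in b) / len(b)
            observed = sum(y for _, y in b) / len(b)
            ece += len(b) / total * abs(avg_prob - observed)
    return ece


# Toy review log: the model said 0.9 four times, but only two were confirmed rashes.
probs = [0.9, 0.9, 0.9, 0.9, 0.1, 0.1]
labels = [1, 1, 0, 0, 0, 0]
print(round(expected_calibration_error(probs, labels), 3))  # 0.3
```

A rising ECE between review periods would suggest recalibrating the model (for example, with Platt scaling or isotonic regression) before adjusting decision thresholds.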

Research Priorities

- Standardized benchmarking: Report per-disease false positive and negative rates across diverse cohorts.

- Human-AI interaction studies: Optimize interfaces, explanations, and workflows to minimize overcalling.

- Contextual data fusion: Integrate patient history, symptoms, and anatomic site to disambiguate visual look-alikes.
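Standardized benchmarking is largely bookkeeping: compute one-vs-rest false positive and false negative rates per diagnosis, then repeat within each cohort. The sketch below does that on toy labels; the class names and cohort labels are placeholders, and rates with an empty denominator are reported as NaN rather than hidden.

```python
from typing import Dict, Sequence


def per_class_error_rates(
    y_true: Sequence[str], y_pred: Sequence[str], classes: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """One-vs-rest false positive and false negative rates for each diagnosis."""
    report = {}
    for c in classes:
        tp = fp = fn = tn = 0
        for truth, pred in zip(y_true, y_pred):
            if pred == c and truth == c:
                tp += 1
            elif pred == c:      # predicted c, but the case was something else
                fp += 1
            elif truth == c:     # case was c, but the model said something else
                fn += 1
            else:
                tn += 1
        report[c] = {
            "fpr": fp / (fp + tn) if fp + tn else float("nan"),
            "fnr": fn / (fn + tp) if fn + tp else float("nan"),
        }
    return report


def report_by_cohort(
    y_true: Sequence[str], y_pred: Sequence[str], cohorts: Sequence[str], classes: Sequence[str]
) -> Dict[str, Dict[str, Dict[str, float]]]:
    """Run the per-class report separately for each cohort label."""
    out = {}
    for cohort in dict.fromkeys(cohorts):  # unique labels in first-seen order
        idx = [i for i, c in enumerate(cohorts) if c == cohort]
        out[cohort] = per_class_error_rates(
            [y_true[i] for i in idx], [y_pred[i] for i in idx], classes
        )
    return out


# Toy data for illustration only.
classes = ["psoriasis", "eczema", "normal"]
y_true = ["psoriasis", "psoriasis", "eczema", "eczema", "normal", "normal"]
y_pred = ["psoriasis", "eczema", "eczema", "psoriasis", "eczema", "normal"]
cohorts = ["clinic", "clinic", "clinic", "community", "community", "community"]

report = per_class_error_rates(y_true, y_pred, classes)
print(report["eczema"])  # {'fpr': 0.5, 'fnr': 0.5}
by_cohort = report_by_cohort(y_true, y_pred, cohorts, classes)
```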

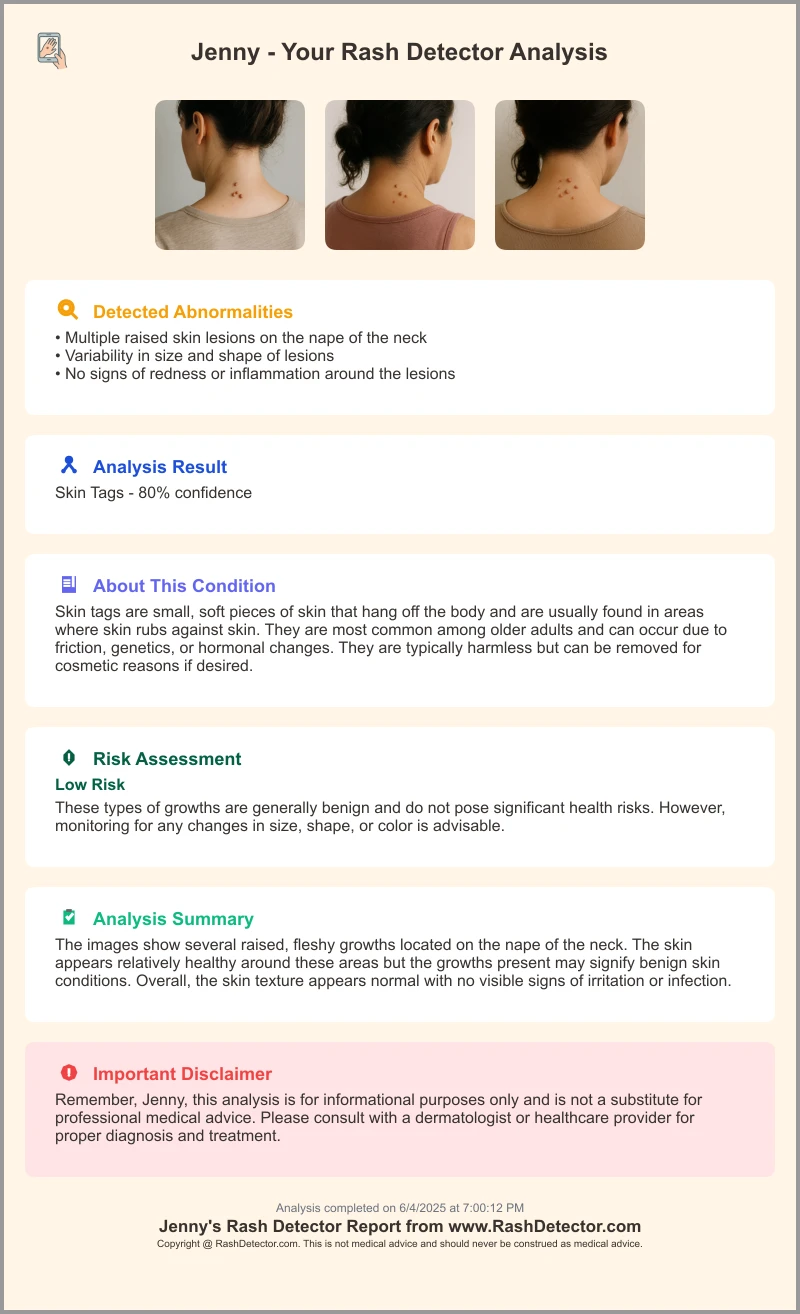

A quick look at a sample AI report from Rash Detector highlights confidence scores and risk assessments that help clinicians identify and reduce false positives.

5. Future Directions for Reducing False Positive Rash Detection

Advanced Modeling Techniques

- Foundation models & self-supervised learning: Pretrain on large, unlabeled dermatology image corpora to learn robust features; fine-tune on specific tasks to improve specificity.

- Continual learning pipelines: Update models with new real-world data to counter domain shift, monitor for model drift, and retrain periodically.
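Drift monitoring can start with something as simple as comparing the distribution of model scores in deployment against a baseline. This sketch uses the population stability index (PSI), a metric borrowed from credit-risk monitoring rather than from dermatology; the 0.25 retraining trigger reflects a commonly cited rule of thumb and is an assumption here, not a validated clinical threshold.

```python
import math
from typing import List, Sequence

# Hypothetical retraining trigger; 0.1 and 0.25 are common rules of thumb, not clinical standards.
PSI_RETRAIN_THRESHOLD = 0.25


def _bin_proportions(scores: Sequence[float], n_bins: int, eps: float) -> List[float]:
    counts = [0] * n_bins
    for s in scores:
        counts[min(int(s * n_bins), n_bins - 1)] += 1
    # eps keeps empty bins from producing log(0) or division by zero
    return [max(c / len(scores), eps) for c in counts]


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], n_bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI = sum((a - e) * ln(a / e)) over equal-width bins of scores in [0, 1],
    where e is the baseline share and a the current share of scores in a bin."""
    expected = _bin_proportions(baseline, n_bins, eps)
    actual = _bin_proportions(current, n_bins, eps)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


baseline = [(i + 0.5) / 10 for i in range(10)]  # one score per bin
stable = list(baseline)
shifted = [0.95] * 10                            # every new image scores very high

print(population_stability_index(baseline, stable))                          # 0.0
print(population_stability_index(baseline, shifted) > PSI_RETRAIN_THRESHOLD)  # True
```

PSI only says that the score distribution has moved; confirming whether false positives actually rose still requires labeled follow-up data.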

Multimodal Integration

- Combine images with metadata: Patient demographics, clinical history, and environmental exposures inform AI decisions and enrich feature sets to disambiguate visual look-alikes.
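A simple way to combine an image model with metadata is late fusion: treat the image probability as log-odds and add weighted metadata terms, which is equivalent to a logistic regression that uses the image logit as one feature. Everything named here is hypothetical, including the feature names and weights in `METADATA_WEIGHTS`; real weights would have to be fitted on confirmed outcomes.

```python
import math
from typing import Dict

# Hypothetical placeholder weights, not clinical estimates. In practice they would be
# learned (e.g., by logistic regression) on a validation set with confirmed outcomes.
METADATA_WEIGHTS: Dict[str, float] = {
    "relevant_history": 0.8,
    "symptom_reported": 0.4,
    "known_benign_mimic": -1.0,
}
BIAS = 0.0


def fuse(image_prob: float, metadata: Dict[str, float], eps: float = 1e-6) -> float:
    """Late fusion: add weighted metadata terms to the image model's log-odds,
    then map back to a probability with the logistic function."""
    p = min(max(image_prob, eps), 1 - eps)  # clamp to avoid log(0)
    z = math.log(p / (1 - p)) + BIAS
    z += sum(w * metadata.get(name, 0.0) for name, w in METADATA_WEIGHTS.items())
    return 1 / (1 + math.exp(-z))


print(round(fuse(0.6, {}), 3))                                                  # 0.6
print(round(fuse(0.6, {"known_benign_mimic": 1.0}), 3))                         # 0.356
print(round(fuse(0.6, {"relevant_history": 1.0, "symptom_reported": 1.0}), 3))  # 0.833
```

In the second call, a (hypothetical) history of a known benign mimic pulls a borderline 0.6 image score below 0.5, which is the kind of disambiguation the bullet describes.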

Ongoing Validation & Equity Monitoring

- Real-world feedback loops: Evaluate models on external validation datasets to track false positive rash detection rates across populations, and collect error reports from clinicians and patients.

- Regulatory & ethical frameworks: Establish guidelines to ensure equitable performance and transparent reporting, engaging stakeholders in continuous oversight of bias and safety.

Conclusion

False positive rash detection undermines patient trust, drives overtreatment, and strains healthcare resources. Key contributors include image quality issues, algorithmic trade-offs, dataset bias, and human factors like automation bias. To improve specificity without sacrificing sensitivity, stakeholders must collaborate on large, diverse datasets, robust model calibration, and hybrid human-AI workflows. Ongoing research should focus on standardized benchmarking, UI/UX optimization, and multimodal data fusion. By advancing accurate, reliable, and equitable AI tools, we can reduce misdiagnoses, enhance dermatologic care, and safeguard patient outcomes.

FAQ

- What causes false positives in AI-based rash detection?

False positives often result from image quality issues, look-alike conditions, operating thresholds tuned for high sensitivity, dataset bias, and automation bias.

- How can clinicians mitigate false positive referrals?

By employing hybrid workflows, reviewing AI suggestions, using calibrated confidence scores, and integrating patient history to contextualize decisions.

- What future AI techniques can reduce false positives?

Approaches like foundation models, self-supervised learning, multimodal data integration, continual learning, and robust equity monitoring can improve specificity and reduce errors.